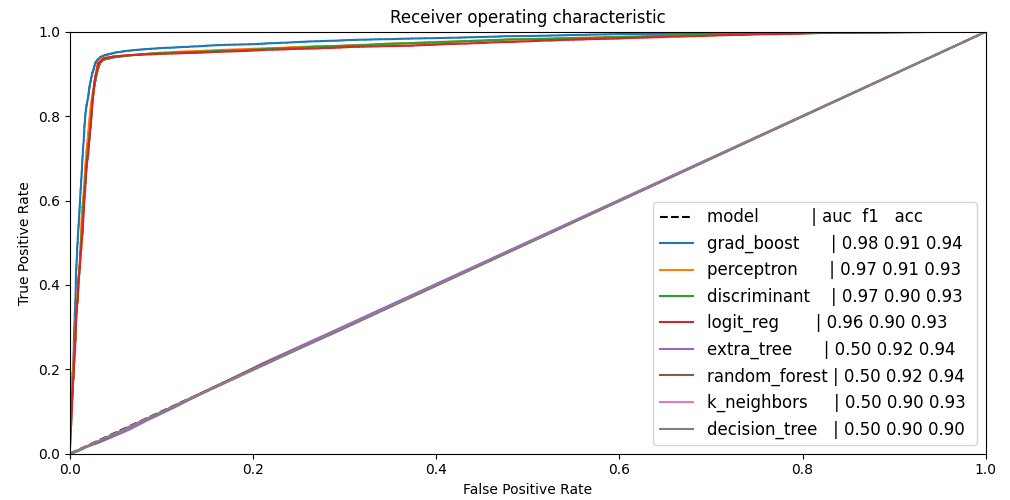

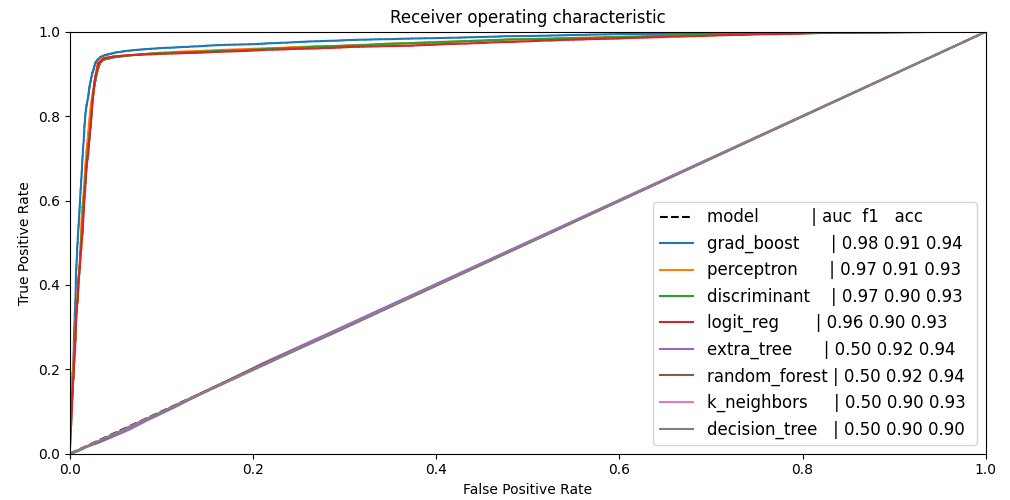

prediction on two classes

Definition and training of the models, feature importance and feature selection.

We want to bin the camera latency into danger classes and predict the class from the other parameters.

The results differ depending on whether we use 2 or 5 classes.
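As a minimal sketch of how the latency could be turned into 2 or 5 classes, the snippet below bins the values at equal-population quantiles. The quantile thresholds, the function name `latency_to_classes` and the sample values are assumptions for illustration; the source does not state how the class boundaries were chosen.

```python
import numpy as np

def latency_to_classes(latency, n_classes):
    """Assign each latency value to one of n_classes equal-population bins."""
    latency = np.asarray(latency, dtype=float)
    # interior quantile levels, e.g. only the median for n_classes=2
    probs = np.linspace(0.0, 1.0, n_classes + 1)[1:-1]
    edges = np.quantile(latency, probs)
    # np.digitize gives 0 below the first edge and n_classes-1 above the last
    return np.digitize(latency, edges)

# hypothetical latency samples
latency = np.array([10, 12, 15, 20, 35, 50, 80, 120, 200, 400])
two = latency_to_classes(latency, 2)    # labels in {0, 1}
five = latency_to_classes(latency, 5)   # labels in {0, ..., 4}
```

With more classes each bin holds fewer samples and neighbouring bins are closer together, so the classification task gets harder for the same amount of data.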

prediction on two classes

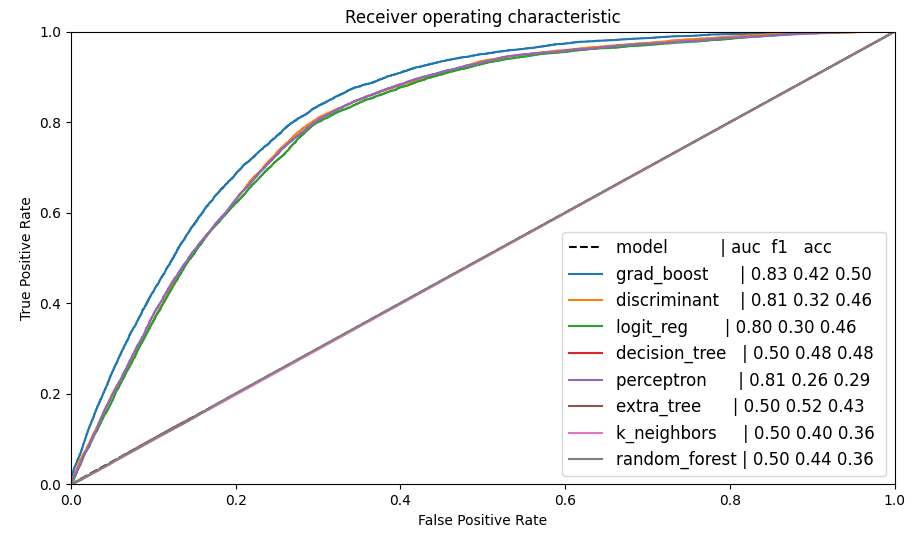

Performance decreases as the number of classes increases.

prediction on two classes

If we consider only the incident set, we see that training is more difficult.

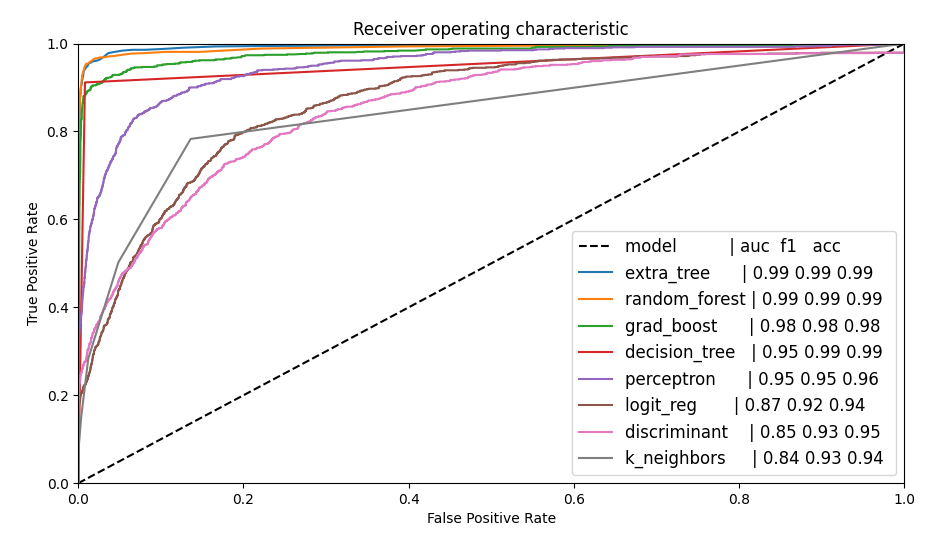

prediction of spikes on incident time series

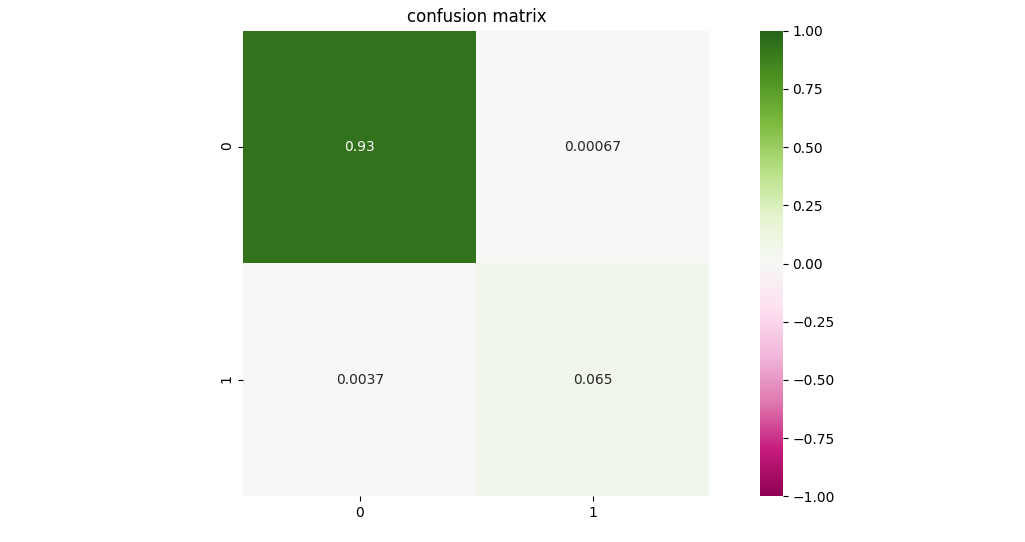

The confusion matrix shows very few counts off the diagonal.

confusion matrix of the spike prediction
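For reference, a confusion matrix of this kind can be computed as in the sketch below. The labels are made-up examples (0 = no spike, 1 = spike), not the project's data, and `confusion_matrix` is a small helper written here for illustration rather than the function used in train_spike.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=2):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

# hypothetical labels: 0 = no spike, 1 = spike
y_true = [0, 0, 0, 0, 1, 1, 0, 1]
y_pred = [0, 0, 0, 0, 1, 0, 0, 1]

cm = confusion_matrix(y_true, y_pred)
off_diagonal = cm.sum() - np.trace(cm)   # number of misclassified samples
spike_recall = cm[1, 1] / cm[1].sum()    # share of true spikes that were caught
```

When spikes are rare, the off-diagonal count can stay small even if a sizeable share of the spikes is missed, so the per-class recall is worth reading alongside the matrix.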

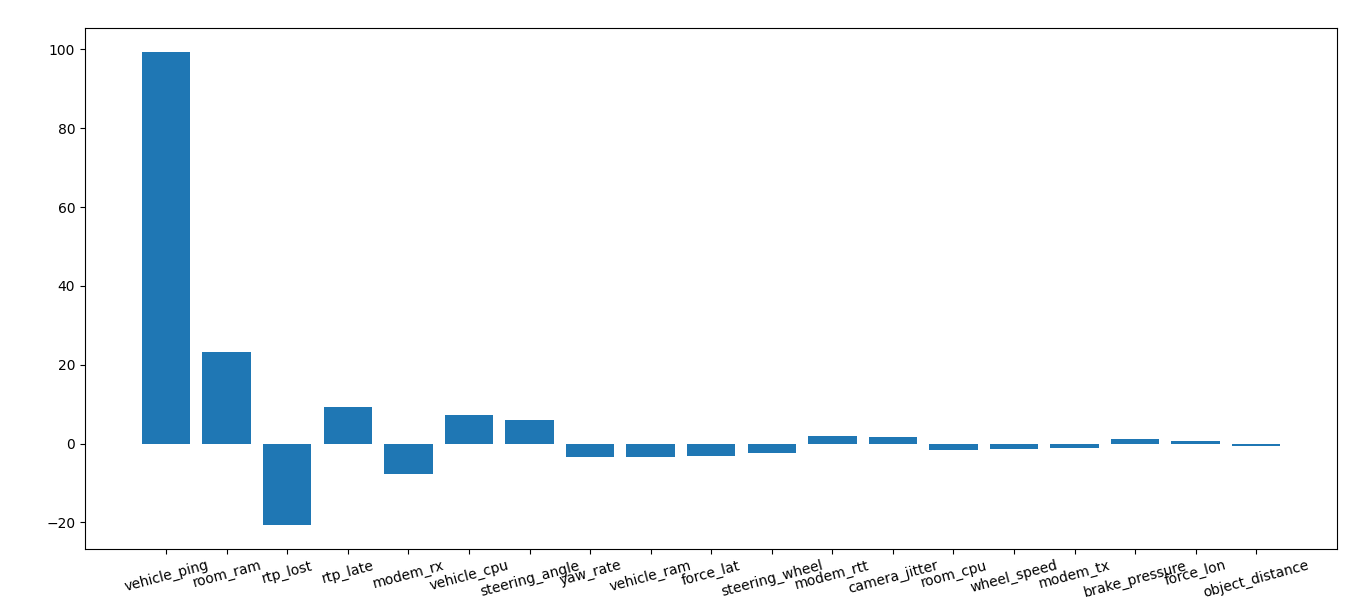

Iterating over different models, we see different performance and feature importances. Despite the differences, most of the models consider modem issues the most relevant feature for spike prediction.

feature importance according to different models
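One model-agnostic way to compare feature importance across different models is permutation importance: shuffle one feature at a time and measure how much the error grows. The sketch below is an illustration of that technique, not necessarily the method used in train_spike. The feature names and the synthetic data are hypothetical, and the data is constructed so that the first feature dominates.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each column of X is shuffled in turn."""
    rng = np.random.default_rng(seed)
    base = np.mean((predict(X) - y) ** 2)
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break the link between feature j and y
            scores[j] += np.mean((predict(Xp) - y) ** 2) - base
    return scores / n_repeats

rng = np.random.default_rng(1)
feature_names = ["modem_issue", "signal_strength", "cpu_load"]  # hypothetical names
X = rng.normal(size=(500, 3))
# synthetic target built so that the first feature matters most
y = 3.0 * X[:, 0] + 0.1 * X[:, 1] + 0.1 * rng.normal(size=500)

# stand-in model: ordinary least squares; any predict function can be plugged in
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
predict = lambda A: A @ coef

importance = permutation_importance(predict, X, y)
ranking = [feature_names[j] for j in np.argsort(importance)[::-1]]
```

Because the function only needs a `predict` callable, the same loop can be run over each trained model and the rankings compared side by side.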

Running a simple regression gives fairly clear results.

feature regression

Code: train_spike.

We want to understand which features are the most important predictors of camera latency.

We create the simplest possible regressor as a baseline model, which sets the reference performance against which the other models are tested.

model = Sequential()

model.add(Dense(layer[0],input_shape=(n_feat,),kernel_initializer='normal',activation='relu'))

model.add(Dense(1, kernel_initializer='normal'))

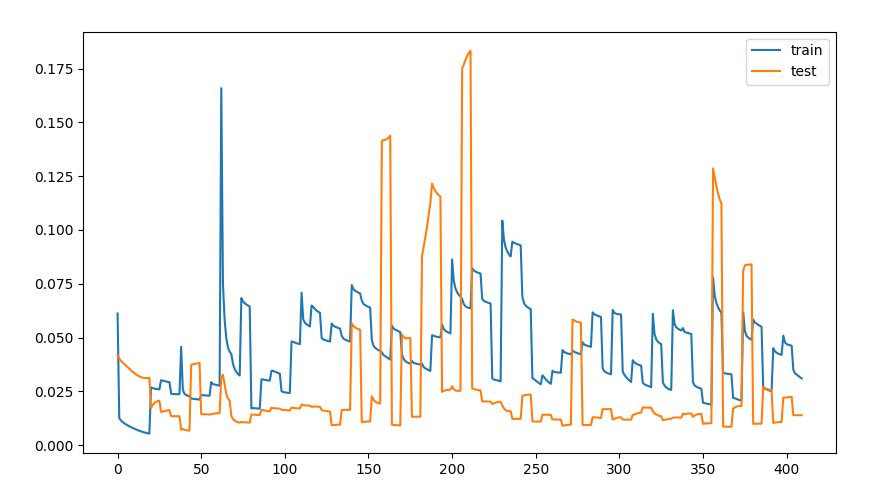

model.compile(loss='mean_squared_error', optimizer='adam')We train the model on the complete dataset and than iterate for each single series
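The two-stage schedule (train on everything, then continue on each series) can be sketched independently of Keras. In the example below a plain NumPy linear model trained by gradient descent stands in for the network, and the three synthetic series, the learning rate and the epoch counts are assumptions chosen for illustration only.

```python
import numpy as np

def fit_epochs(w, X, y, epochs, lr=0.05):
    """Run `epochs` full-batch gradient steps on the mean squared error, starting from w."""
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))

rng = np.random.default_rng(0)
# three hypothetical series that share a trend but differ in one weight
series = []
for shift in (-0.5, 0.0, 0.5):
    X = rng.normal(size=(200, 2))
    y = X @ np.array([1.0 + shift, -2.0]) + 0.1 * rng.normal(size=200)
    series.append((X, y))

# stage 1: train on the pooled data
X_all = np.vstack([X for X, _ in series])
y_all = np.concatenate([y for _, y in series])
w_shared = fit_epochs(np.zeros(2), X_all, y_all, epochs=200)

# stage 2: continue from the shared weights on each single series
results = []
for X, y in series:
    w_s = fit_epochs(w_shared.copy(), X, y, epochs=50)
    results.append((mse(w_shared, X, y), mse(w_s, X, y)))  # (before, after) on that series
```

With a Keras model the same pattern amounts to calling `model.fit` once on the pooled data and then again on each series without re-creating the model, so the weights carry over between calls.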

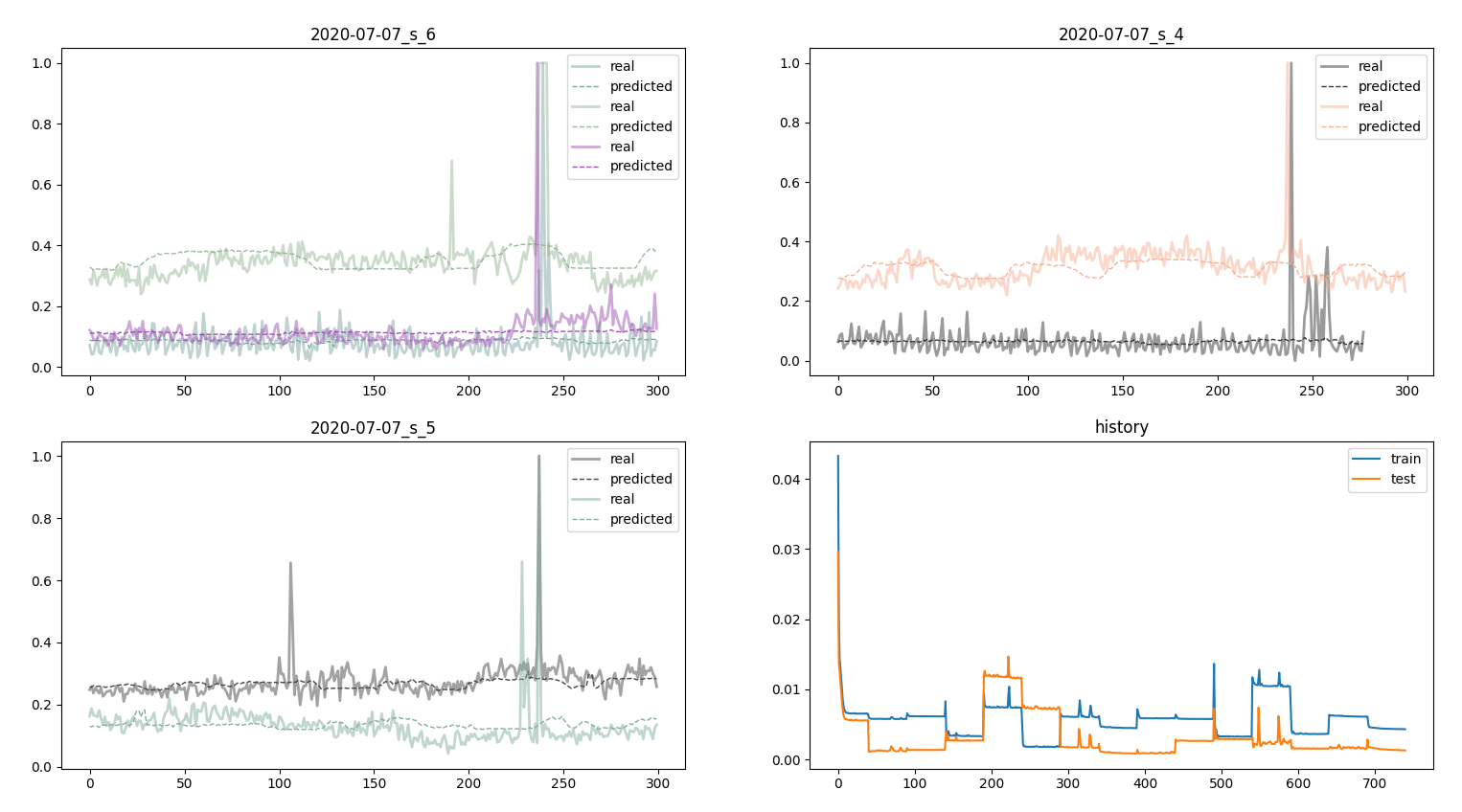

performance of the baseline model

We see that the baseline model predicts only a single peak.

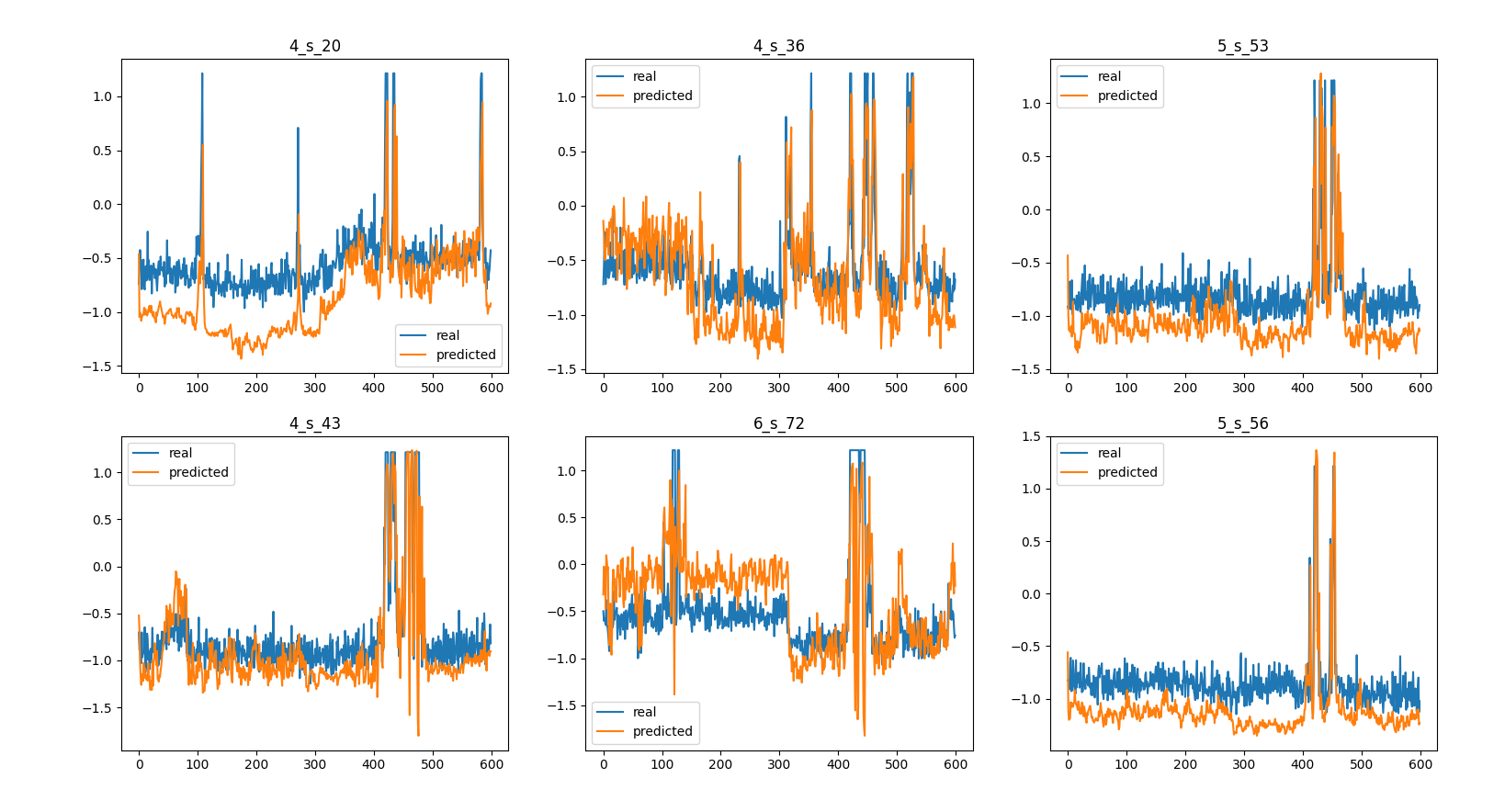

performance of a three-layer network on incident data

With a deeper network, a few more peaks are detected.

Code: feature_etl.

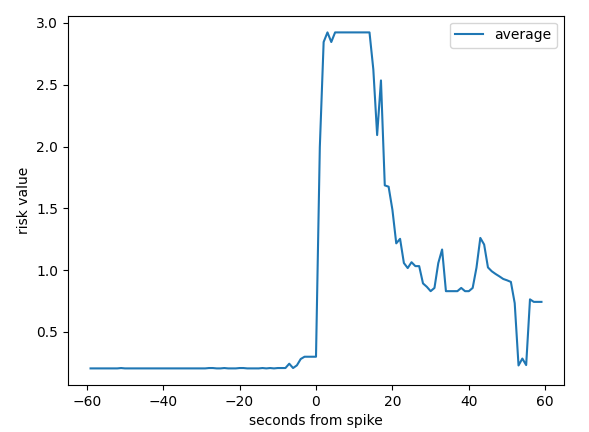

We want to calculate the spike risk: we predict the time_to_spike from the other features and derive the risk of an upcoming spike. We first train on the denoised average.
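A possible way to derive a time_to_spike target from a latency series is sketched below. The spike threshold of 40 and the sample values are hypothetical, and the function is an illustration rather than the code in feature_etl.

```python
import numpy as np

def time_to_spike(is_spike):
    """Steps until the next spike at or after each index; np.inf if no spike follows."""
    is_spike = np.asarray(is_spike, dtype=bool)
    tts = np.full(len(is_spike), np.inf)
    next_spike = np.inf
    # walk backwards so the most recent spike seen is the next one in time
    for i in range(len(is_spike) - 1, -1, -1):
        if is_spike[i]:
            next_spike = i
        tts[i] = next_spike - i
    return tts

# hypothetical latency samples and spike threshold
latency = np.array([10, 11, 50, 12, 10, 9, 60, 11])
tts = time_to_spike(latency > 40)
# tts is [2, 1, 0, 3, 2, 1, 0, inf]
```

Samples with no later spike (the `inf` entries) have an unknown time to event. In a survival setting they would be treated as censored observations rather than dropped.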

prediction deviation

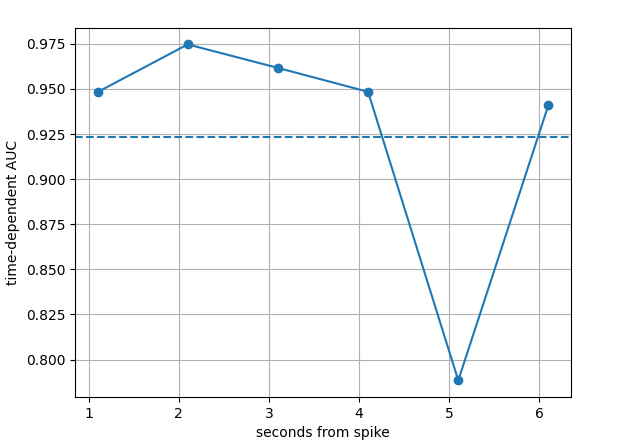

We then apply a Cox-Breslow survival estimator to calculate the cumulative dynamic AUC.

AUC on Cox-Breslow

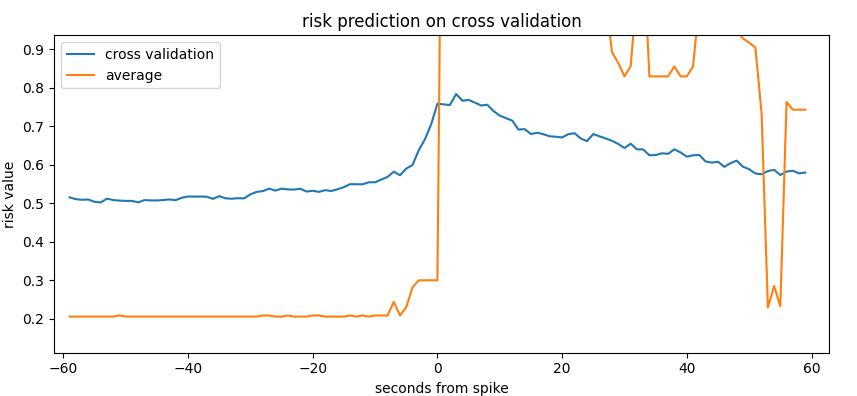

We then perform cross-validation, training the model on one series and predicting on another. We see that the cross-validated prediction tends towards the average prediction.

risk prediction on cross validation

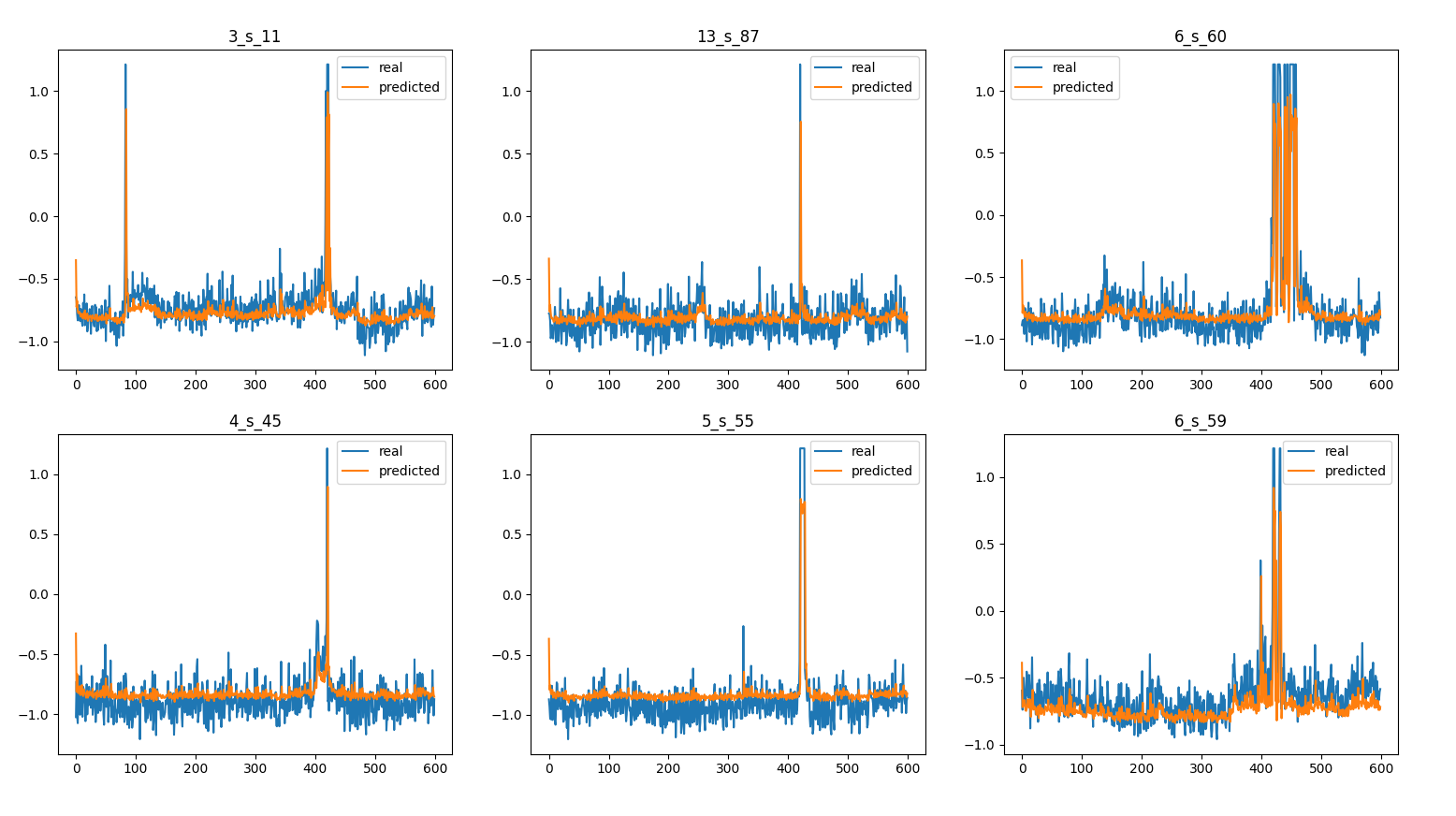

We first fit an LSTM on the camera_latency itself. We run 20 epochs on the average and then 2 epochs on each single series.
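A sequence model trained on camera_latency itself needs the series cut into fixed-length input windows, each paired with a future value. The sketch below shows one common way to build them. The window length, the horizon and the helper name `make_windows` are assumptions, not values taken from the project.

```python
import numpy as np

def make_windows(series, n_in, horizon=1):
    """Build (samples, n_in, 1) inputs and the value `horizon` steps after each window."""
    series = np.asarray(series, dtype=float)
    n = len(series) - n_in - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for the requested window and horizon")
    X = np.stack([series[i:i + n_in] for i in range(n)])
    y = series[n_in + horizon - 1 : n_in + horizon - 1 + n]
    # trailing axis of size 1: one feature per time step, the layout recurrent layers expect
    return X[..., np.newaxis], y

X, y = make_windows(np.arange(10), n_in=3)
# X[0] holds [0, 1, 2] and y[0] is 3; X[6] holds [6, 7, 8] and y[6] is 9
```

Windows built from the averaged series can be used for the long first stage and windows from each individual series for the short per-series stage.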

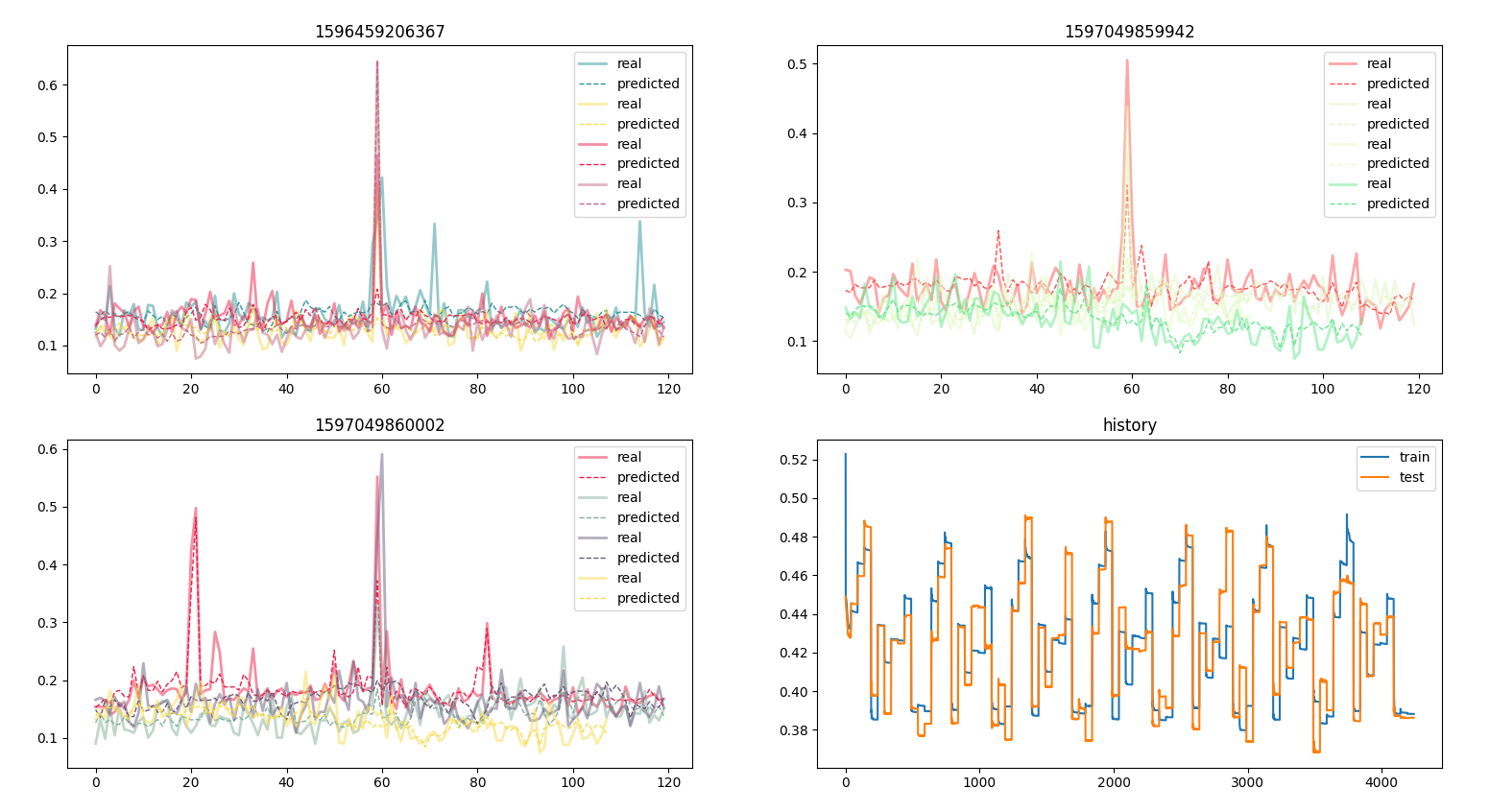

different example series on spike forecast

We see that the learning is rather irregular.

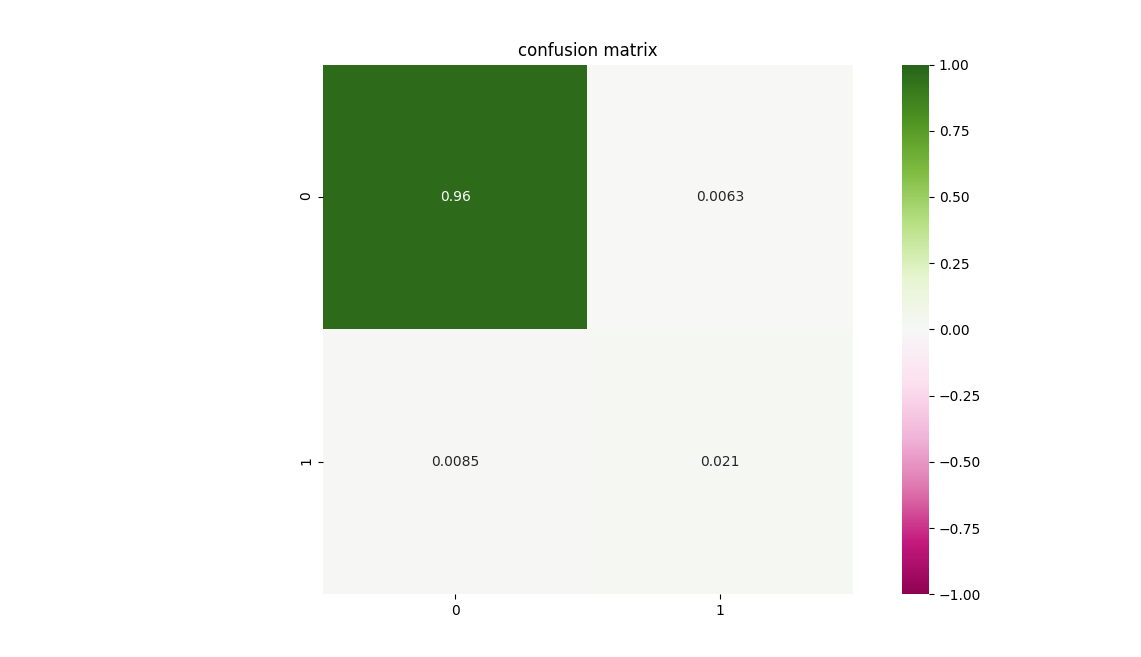

We then check how many spikes we can forecast.

confusion matrix on forecasted spikes

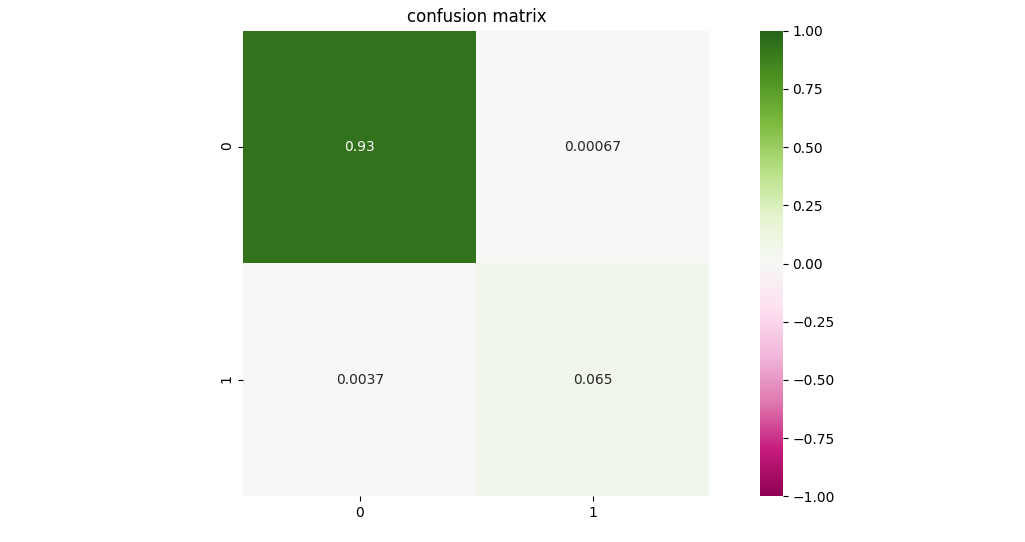

Finally, we perform a forecast using only networking features

forecast with networking features

and calculate the final confusion matrix.

confusion matrix on forecasted spikes with networking features