Agent compensation is a crucial stimulus for achieving both individual and company objectives.

compensation models

code: target_rateCard

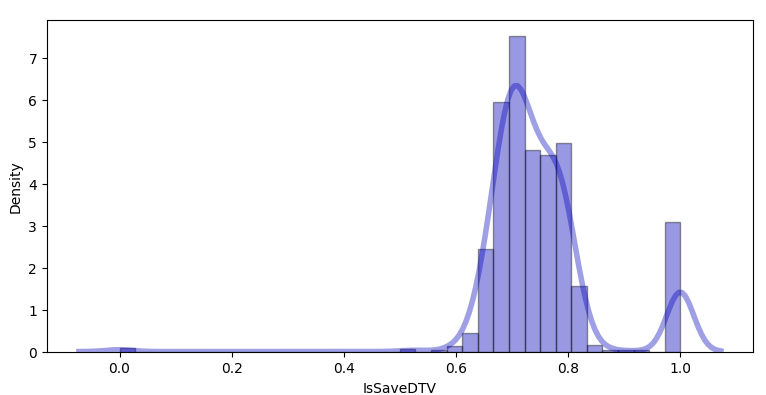

The usual agent target is the save rate.

overall save rate

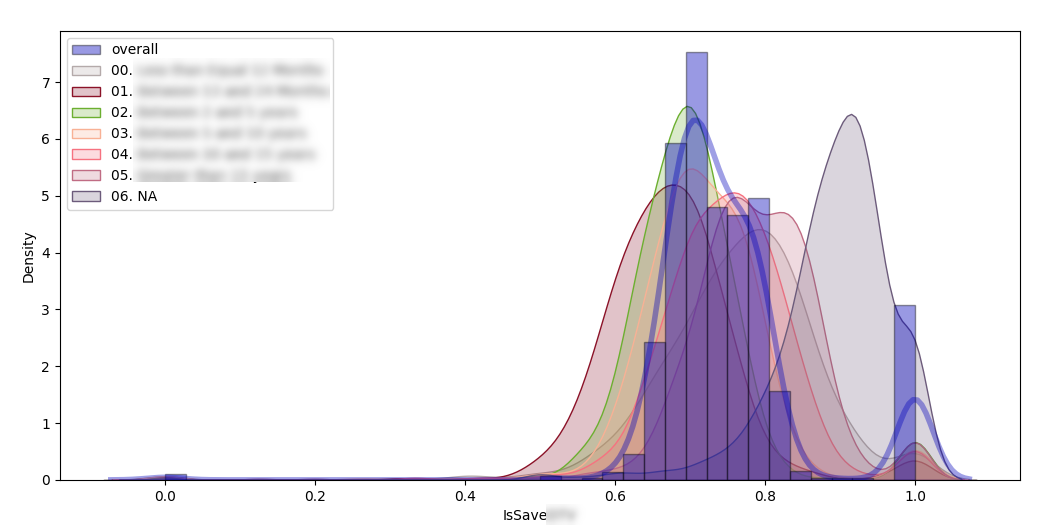

In practice, we know that many factors influence the save rate.

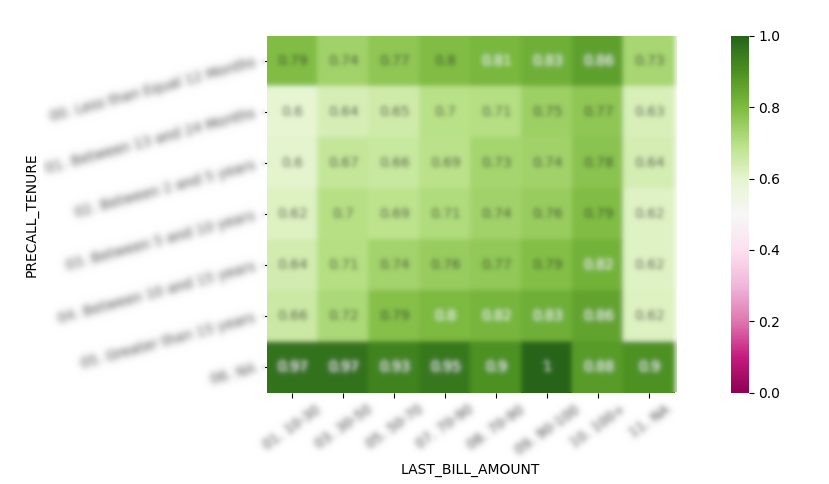

save rate depending on customer tenure

The problem can be extended to multiple dimensions.

3D representation of the save rate

code: target_etl query: sky

We first extract the data by running the query on the client machine, obtaining the save rate together with the agent and user data.

We have a few features describing the customer, the agent group and the call type. Most of the data come in buckets, which we turn into continuous variables.

We then convert categorical features into continuous variables to improve model performance.

- last_bill_amount is substituted by the upper bound of its bucket
- precall_tenure is substituted by the interval mean
- package: hierarchical tree
- category: one-hot encoding

code: target_stat
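
A minimal sketch of these conversions, assuming buckets arrive as strings such as `"50-100"`. The bucket format and the category values are hypothetical, and the package hierarchy is left out because its tree is not described here.

```python
from typing import Dict, List, Tuple


def bucket_bounds(bucket: str) -> Tuple[float, float]:
    """Parse a bucket label such as '50-100' into (lower, upper)."""
    low, high = bucket.split("-")
    return float(low), float(high)


def bill_upper_bound(bucket: str) -> float:
    # last_bill_amount: replace the bucket with its upper bound
    return bucket_bounds(bucket)[1]


def tenure_interval_mean(bucket: str) -> float:
    # precall_tenure: replace the bucket with the mid-point of the interval
    low, high = bucket_bounds(bucket)
    return (low + high) / 2


def one_hot(values: List[str]) -> List[Dict[str, int]]:
    # category: one 0/1 indicator column per distinct value
    categories = sorted(set(values))
    return [{f"category_{c}": int(v == c) for c in categories} for v in values]


# hypothetical raw rows as they might come out of the query
rows = [
    {"last_bill_amount": "50-100", "precall_tenure": "12-24", "category": "tv"},
    {"last_bill_amount": "100-150", "precall_tenure": "0-12", "category": "broadband"},
]
dummies = one_hot([r["category"] for r in rows])
features = [
    {
        "last_bill_amount": bill_upper_bound(r["last_bill_amount"]),
        "precall_tenure": tenure_interval_mean(r["precall_tenure"]),
        **d,
    }
    for r, d in zip(rows, dummies)
]
```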

We have only a few features to predict the success rate, so we analyze the quality of the input data.

There are missing values across all features, and we need to cast types so that Python can handle the different kinds of data.
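
A minimal standard-library sketch of the type casting and the per-feature missing-value count. The set of null markers and the sample records are assumptions about how the query export looks, not taken from the actual data.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# assumed spellings of a missing value in the raw export
NULL_MARKERS = {"", "null", "na", "nan", "none"}


def to_float(value: Optional[str]) -> Optional[float]:
    """Cast a raw field to float; null markers and unparsable text become None."""
    if value is None or value.strip().lower() in NULL_MARKERS:
        return None
    try:
        return float(value)
    except ValueError:
        return None


def cast_rows(
    rows: List[Dict[str, Optional[str]]], numeric_fields: List[str]
) -> Tuple[List[Dict[str, Optional[float]]], Counter]:
    """Type-cast the numeric fields and count missing values per field."""
    cast, missing = [], Counter()
    for row in rows:
        new_row = dict(row)
        for field in numeric_fields:
            new_row[field] = to_float(row.get(field))
            if new_row[field] is None:
                missing[field] += 1
        cast.append(new_row)
    return cast, missing


raw = [
    {"last_bill_amount": "85.5", "precall_tenure": "14", "call_time": ""},
    {"last_bill_amount": "NULL", "precall_tenure": "3", "call_time": "420"},
    {"last_bill_amount": "120", "precall_tenure": None, "call_time": "n/a"},
]
rows, missing = cast_rows(raw, ["last_bill_amount", "precall_tenure", "call_time"])
print(dict(missing))
```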

The data are narrowly distributed, so we neither replace nor exclude outliers, nor do we transform the data.

We start by investigating the customer dimensions.

save rate depending on last bill and tenure

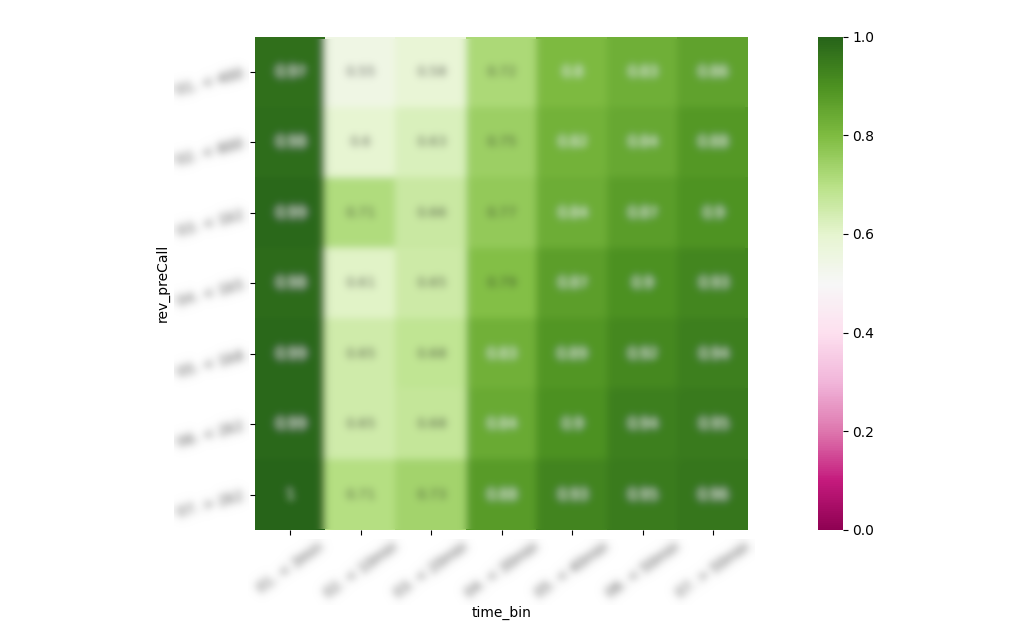

We see a dependence on call time, but we consider it an effect rather than a cause of the save rate.

save rate depending on call time and customer revenue

We can drill down on many different levels, and we always see a contribution from the features.

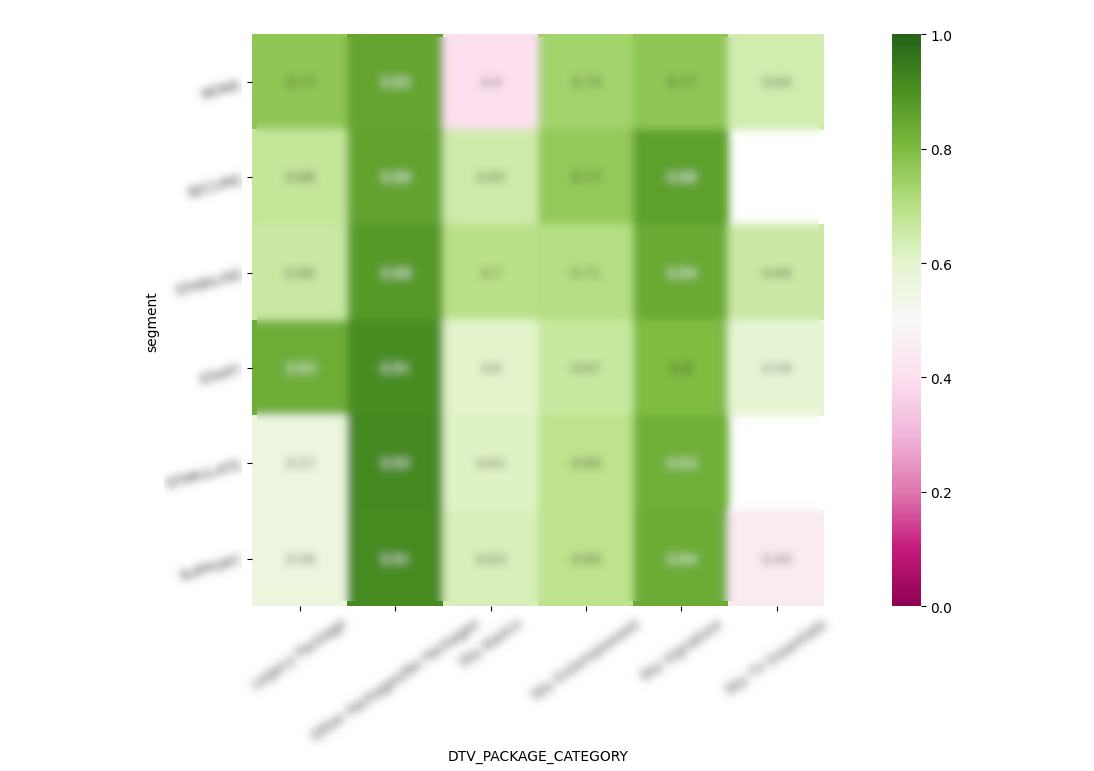

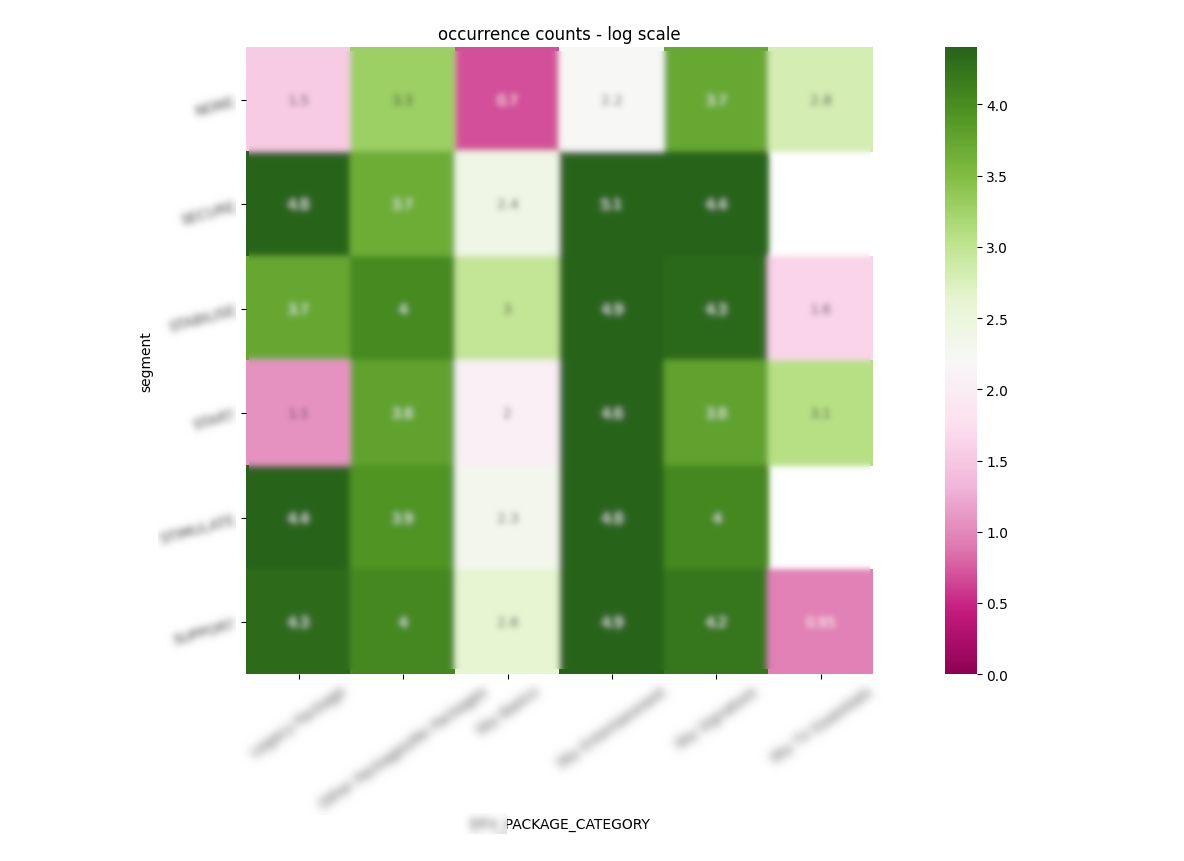

save rate depending on segments and product categories

Taking into account that low-count values are discarded or clamped into larger buckets:

save rate depending on segments and product categories

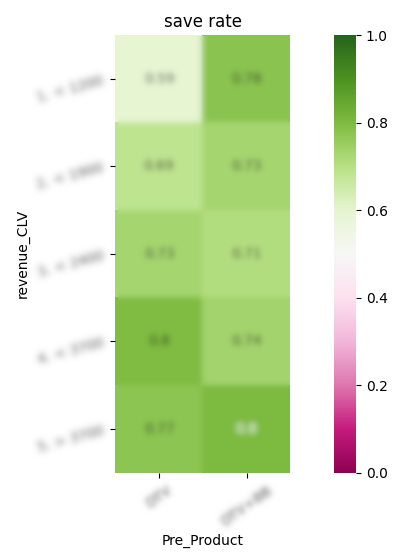
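
A minimal sketch of that clamping: buckets with fewer calls than a threshold are merged into an `other` bucket before the save rate per segment is computed. The threshold of 30 calls, the `other` label and the segment names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def save_rate_by_bucket(
    calls: Iterable[Tuple[str, bool]], min_calls: int = 30
) -> Dict[str, float]:
    """Save rate per bucket; buckets with fewer than min_calls calls are merged into 'other'."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # bucket -> [saved, total]
    for bucket, saved in calls:
        counts[bucket][0] += int(saved)
        counts[bucket][1] += 1

    merged: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for bucket, (saved, total) in counts.items():
        key = bucket if total >= min_calls else "other"
        merged[key][0] += saved
        merged[key][1] += total
    return {bucket: saved / total for bucket, (saved, total) in merged.items()}


calls = (
    [("premium", True)] * 40 + [("premium", False)] * 10
    + [("basic", True)] * 3 + [("basic", False)] * 2
    + [("legacy", False)] * 5
)
rates = save_rate_by_bucket(calls, min_calls=30)
```

Here the two small segments (5 calls each) end up in `other`, while `premium` keeps its own save rate.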

Finally, we propose the following save rate for the rate card, because it connects with both the revenue stream and product development.

save rate depending on customer value and product holding

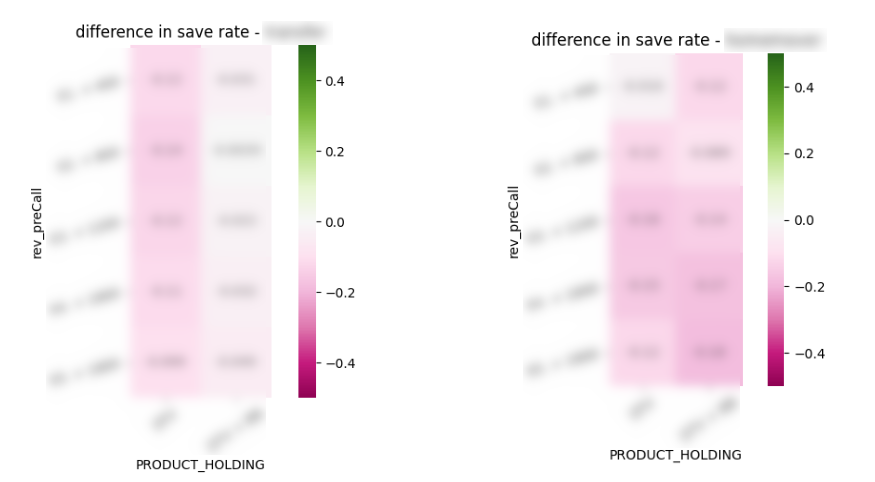

We see that other variables are implicitly modeled by the rate card.

variables implicitly defined by existing variables

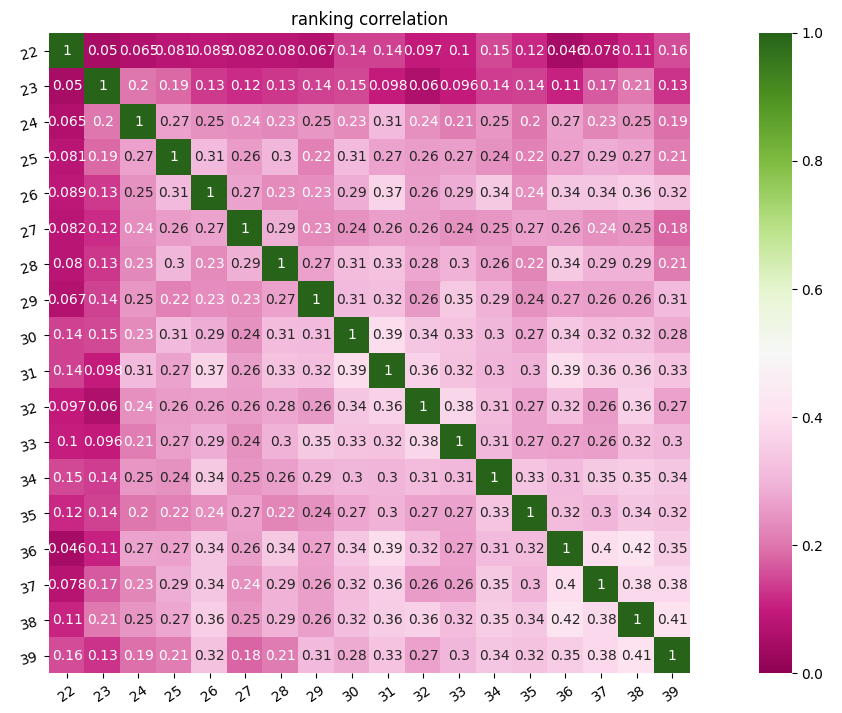

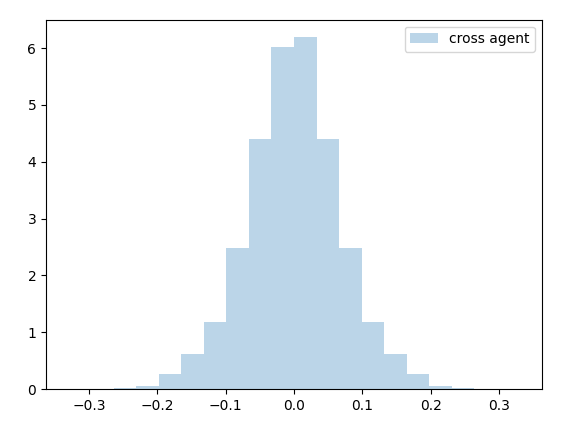

Is there a bias in home-mover and no-transfer calls, where a group of agents receives an uneven distribution of user groups? We test this by calculating the share of call groups for each agent in each week and computing the ranking distribution.

ranking from week to week

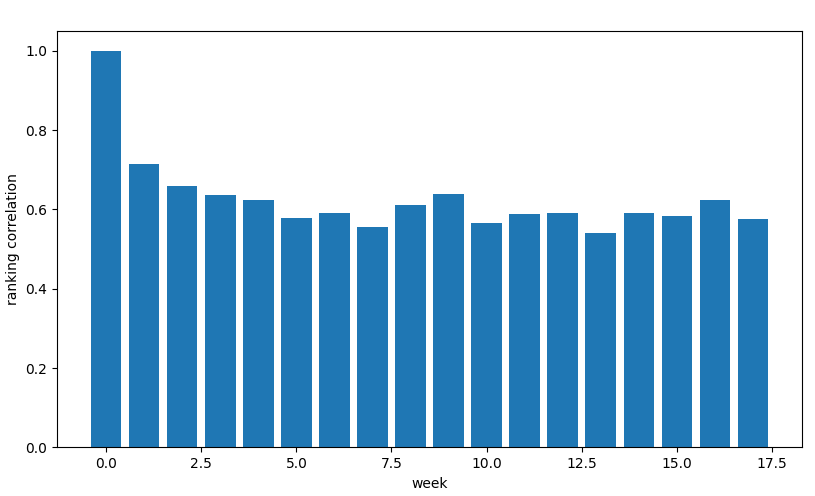
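
A minimal plain-Python sketch of this test, assuming call records of the form `(week, agent, call_group)`; the record layout and the group label are hypothetical. Each week, agents are ranked by their share of the call group, and the Spearman correlation (the Pearson correlation of the ranks) between week t and week t + lag is averaged over all weeks. Evaluating it for increasing lags traces how quickly the ranking decorrelates.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def weekly_shares(calls: Sequence[Tuple[int, str, str]], group: str) -> Dict[int, Dict[str, float]]:
    """Share of `group` calls per agent and week, from (week, agent, call_group) records."""
    totals = Counter((week, agent) for week, agent, _ in calls)
    hits = Counter((week, agent) for week, agent, g in calls if g == group)
    shares: Dict[int, Dict[str, float]] = {}
    for (week, agent), n in totals.items():
        shares.setdefault(week, {})[agent] = hits[(week, agent)] / n
    return shares


def ranks(values: List[float]) -> List[float]:
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; NaN when either series is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (sx * sy)


def rank_autocorrelation(shares: Dict[int, Dict[str, float]], lag: int) -> float:
    """Mean Spearman correlation of agent rankings between week t and week t + lag."""
    corrs = []
    for week in sorted(shares):
        if week + lag not in shares:
            continue
        agents = sorted(set(shares[week]) & set(shares[week + lag]))
        if len(agents) < 2:
            continue
        rho = pearson(ranks([shares[week][a] for a in agents]),
                      ranks([shares[week + lag][a] for a in agents]))
        if not math.isnan(rho):
            corrs.append(rho)
    return sum(corrs) / len(corrs) if corrs else float("nan")
```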

The ranking correlation drops after a few weeks.

decay of autocorrelation over weeks

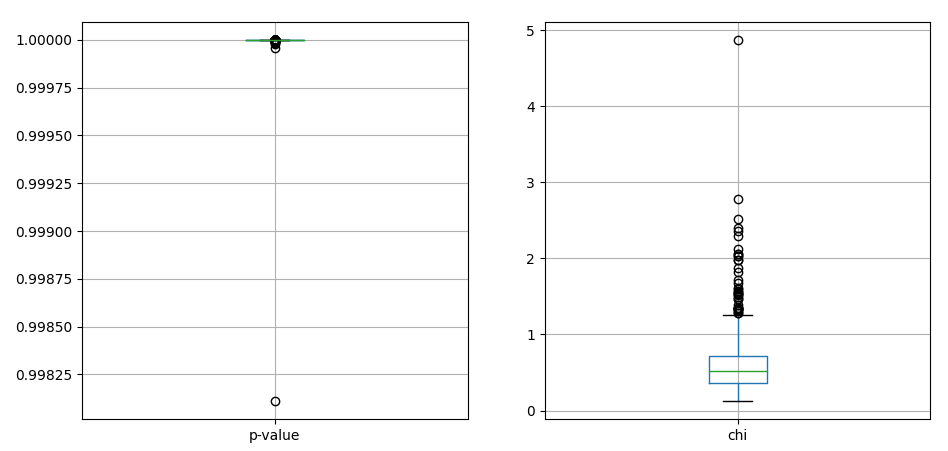

We run a chi-square test comparing each agent's call distribution with the average distribution.

chi values for agent call distribution

We see that the chi-square values are small and the p-values high, so we find no evidence that agents receive an uneven distribution of call groups.

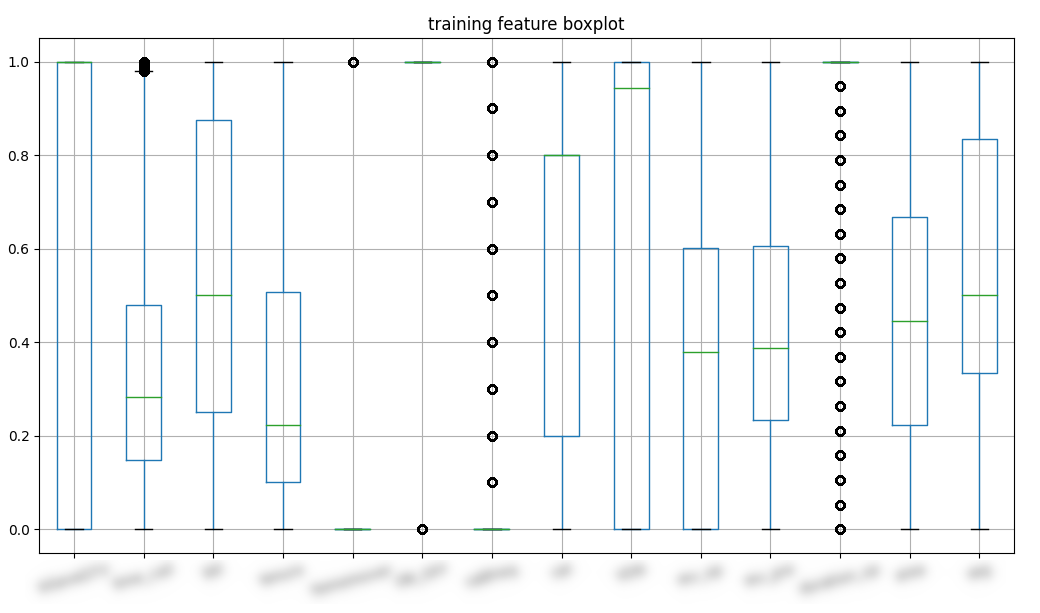

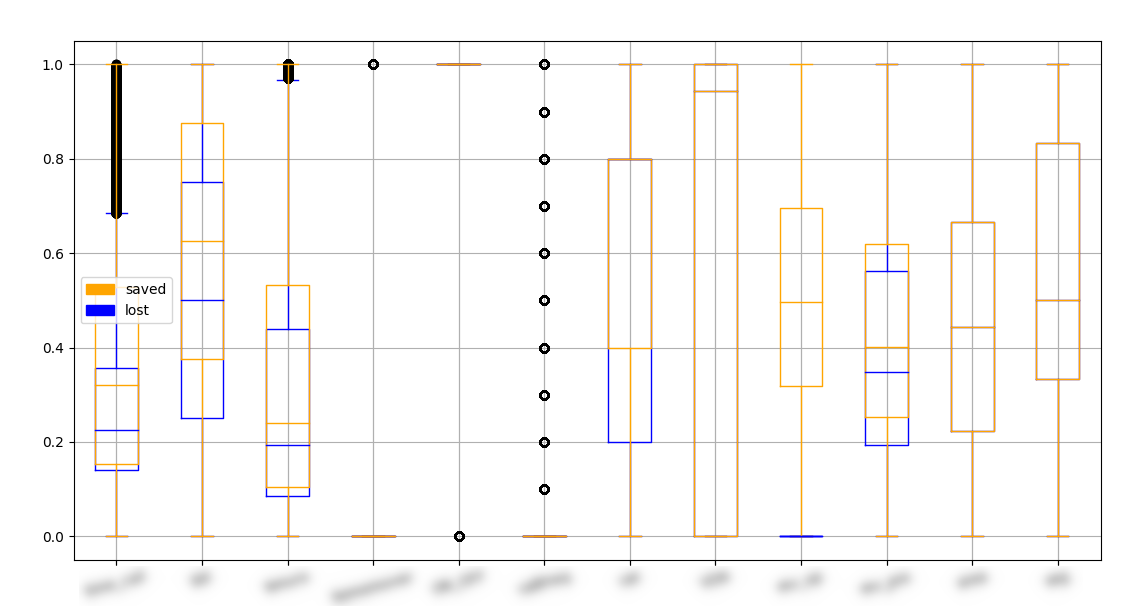
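
A minimal sketch of this test for one agent, assuming the expected distribution is the average share of each call group across all agents. Instead of the asymptotic chi-square distribution, the p-value here is estimated by Monte-Carlo simulation (drawing agents with the same call volume from the average shares); this is a swapped-in technique that keeps the example within the standard library. Group names and counts are hypothetical, and every share must be positive.

```python
import random
from collections import Counter
from typing import Mapping, Tuple


def chi_square_stat(counts: Mapping[str, int], shares: Mapping[str, float]) -> float:
    """Pearson chi-square statistic of observed counts against expected shares (all shares > 0)."""
    n = sum(counts.get(g, 0) for g in shares)
    return sum((counts.get(g, 0) - n * p) ** 2 / (n * p) for g, p in shares.items())


def monte_carlo_chi_square(
    counts: Mapping[str, int],
    shares: Mapping[str, float],
    n_sim: int = 2000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Chi-square statistic and Monte-Carlo p-value for one agent's call-group counts."""
    rng = random.Random(seed)
    groups = list(shares)
    weights = [shares[g] for g in groups]
    n = sum(counts.get(g, 0) for g in groups)
    observed = chi_square_stat(counts, shares)
    # count simulated agents (same call volume, average shares) at least as extreme
    extreme = sum(
        chi_square_stat(Counter(rng.choices(groups, weights=weights, k=n)), shares) >= observed
        for _ in range(n_sim)
    )
    return observed, (extreme + 1) / (n_sim + 1)
```

A small statistic with a high p-value, as in the figures above, means the agent's mix of call groups is compatible with the average mix.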

We analyze the distribution of the normalized features and compare their variances.

boxplot of normalized features

Most of the values are discretized.

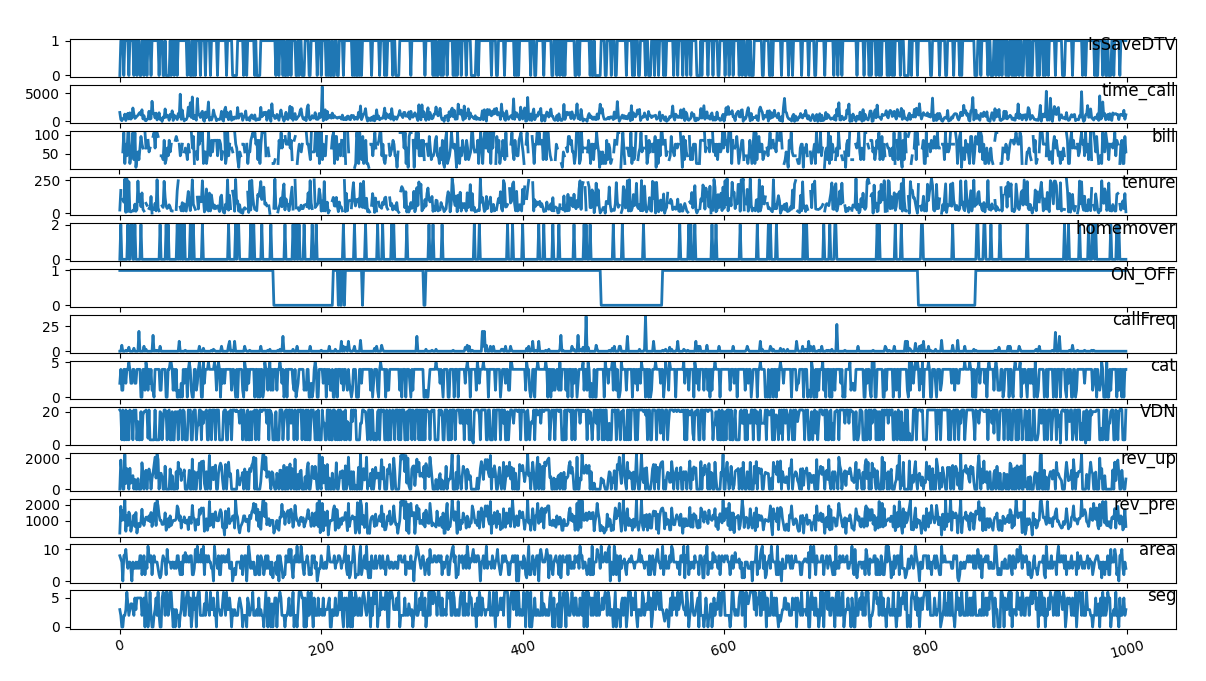
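
The text does not say which normalization was used. This minimal sketch assumes min-max scaling to [0, 1] and then compares the population variance of each feature; the feature values are illustrative only.

```python
from statistics import pvariance
from typing import Dict, List


def min_max_normalize(values: List[float]) -> List[float]:
    """Rescale to [0, 1]; a constant feature maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def normalized_variances(features: Dict[str, List[float]]) -> Dict[str, float]:
    """Variance of each feature after min-max normalization, largest first."""
    variances = {name: pvariance(min_max_normalize(vals)) for name, vals in features.items()}
    return dict(sorted(variances.items(), key=lambda kv: kv[1], reverse=True))


result = normalized_variances({
    "last_bill_amount": [50, 100, 150, 200],
    "precall_tenure": [6, 6, 6, 30],
})
```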

time series of features

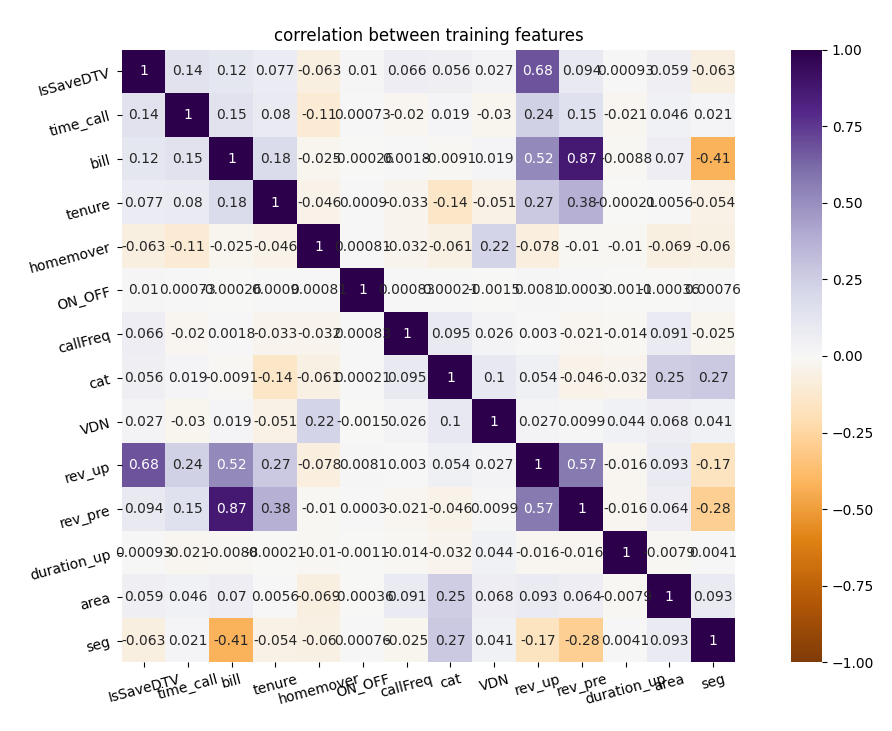

We see that this feature set does not show any internal correlation.

correlation of features

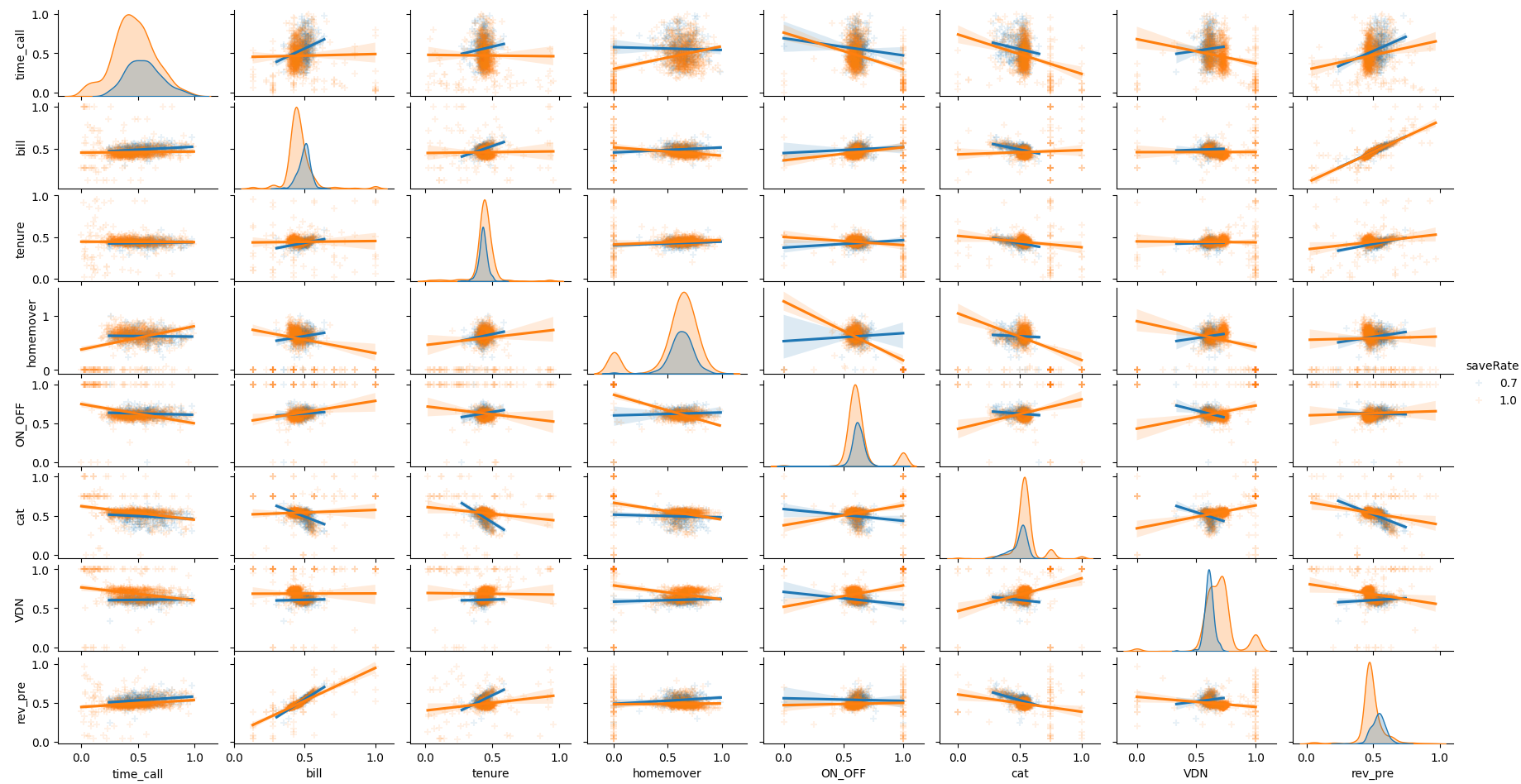

We display a pairplot highlighting how the distributions differ depending on the save outcome. We see a clear difference in distribution across all features, and especially a different regression for last_bill_amount and precall_tenure.

pairplot of features depending on success

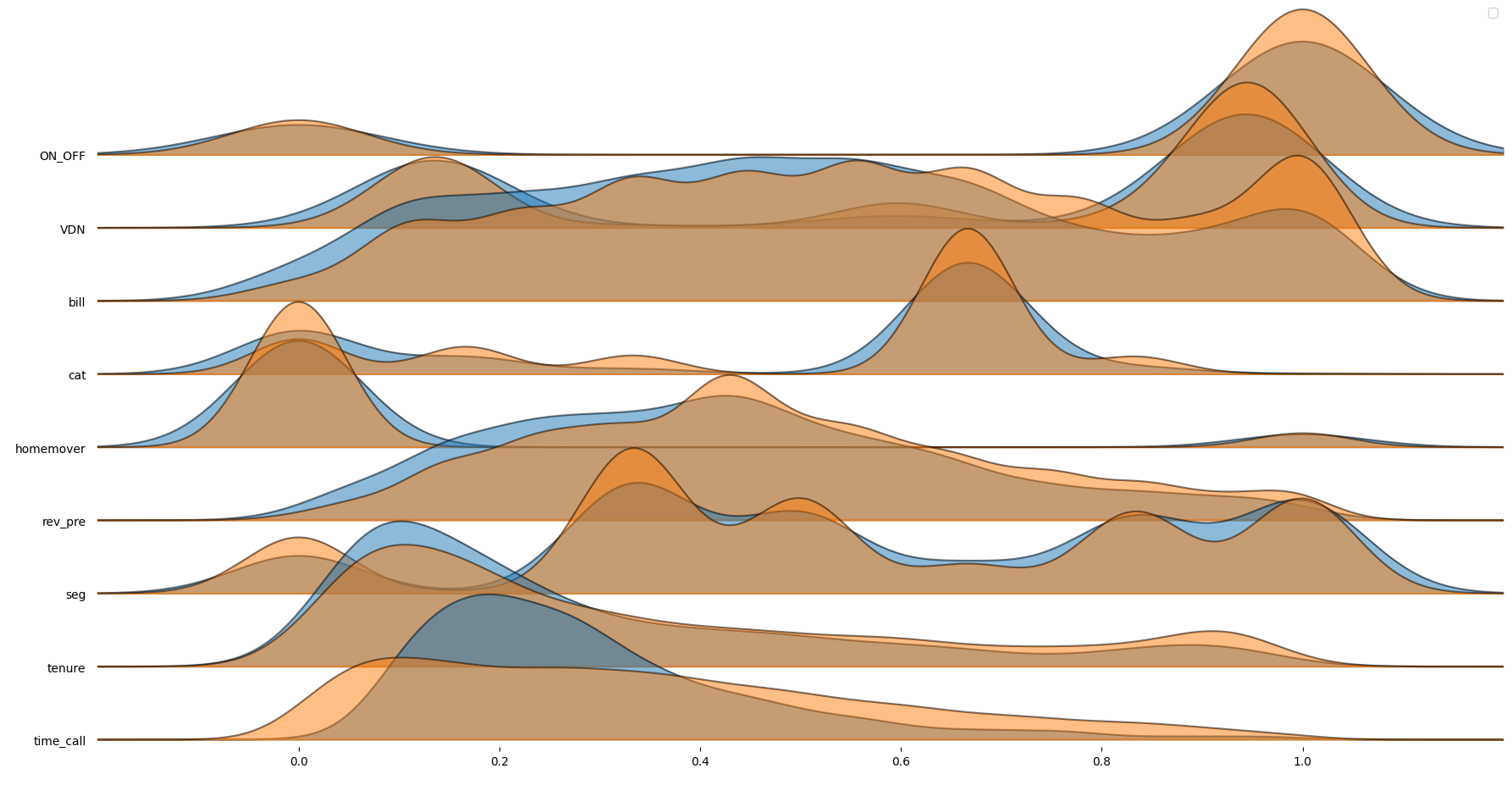

A close-up shows which features are important for the prediction.

joyplot for the most relevant features

We can see a slight difference in distribution between the success subsets.

feature on success

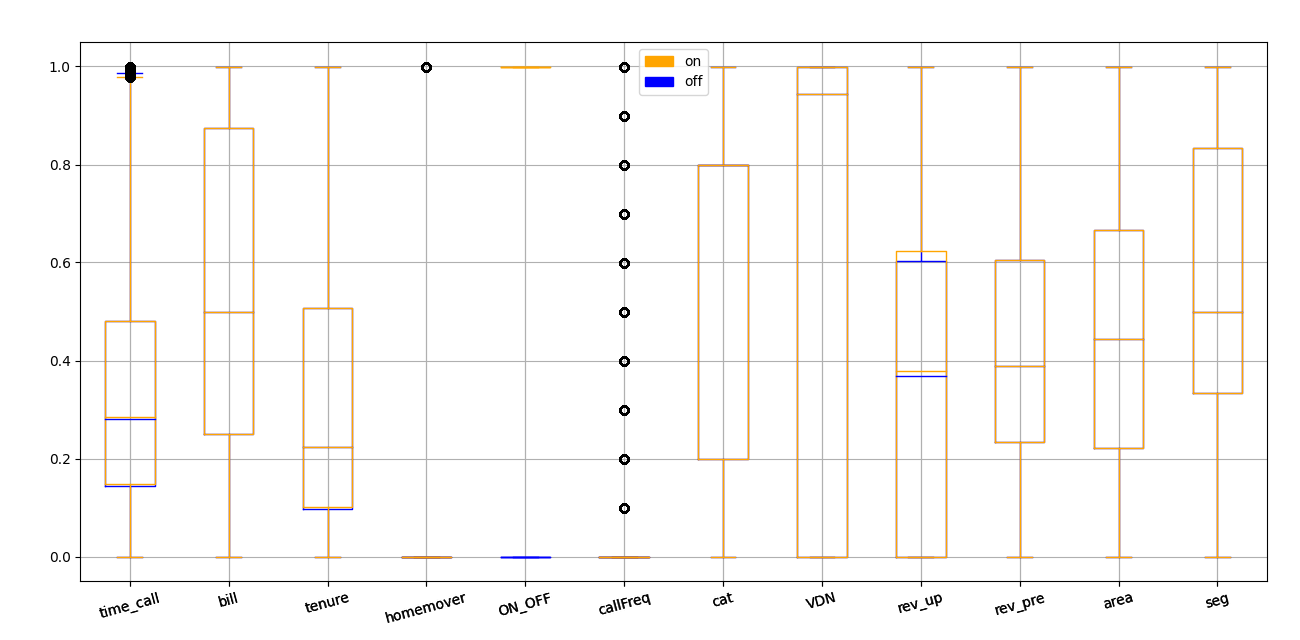

We see that the Afiniti engine slightly increases the call time.

feature with Afiniti on

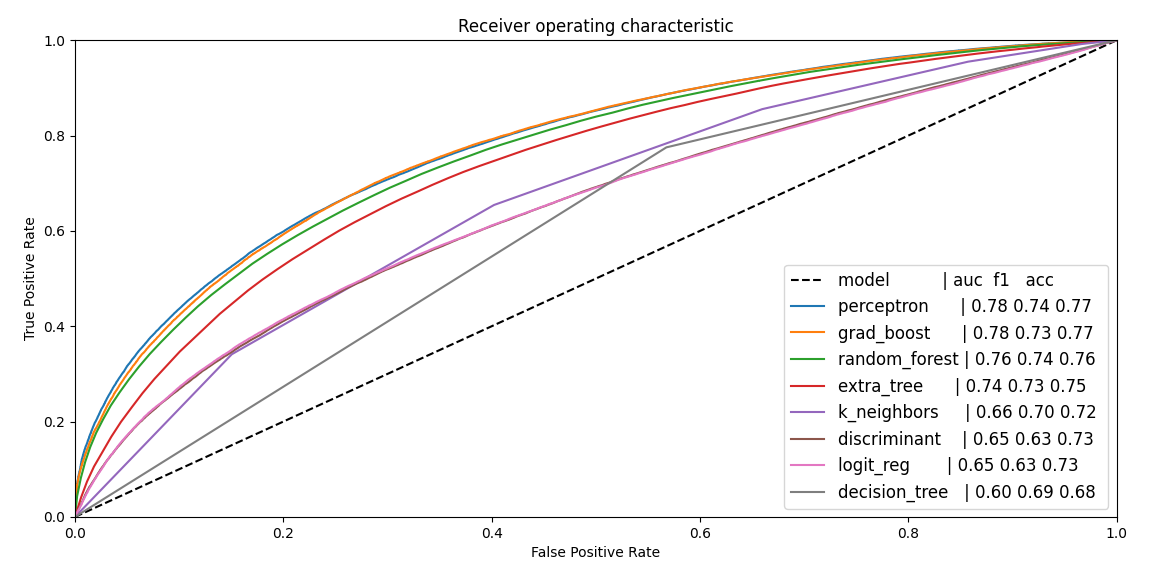

We iterate over different models to measure the predictive strength of this feature set. We see that the performance is not high.

prediction of save rate

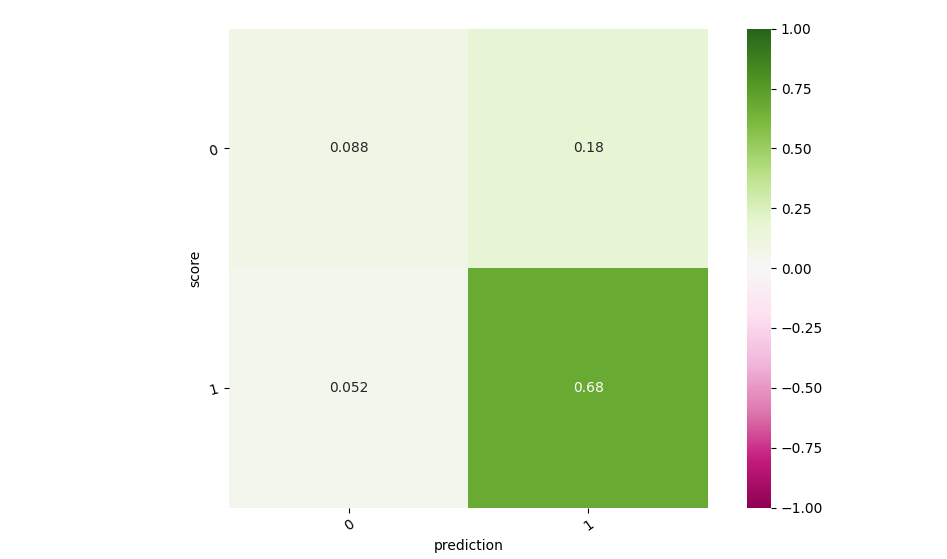

We see a larger number of false positives, which shows how difficult it is to predict whether a customer will cancel the contract.

confusion matrix on save rate prediction

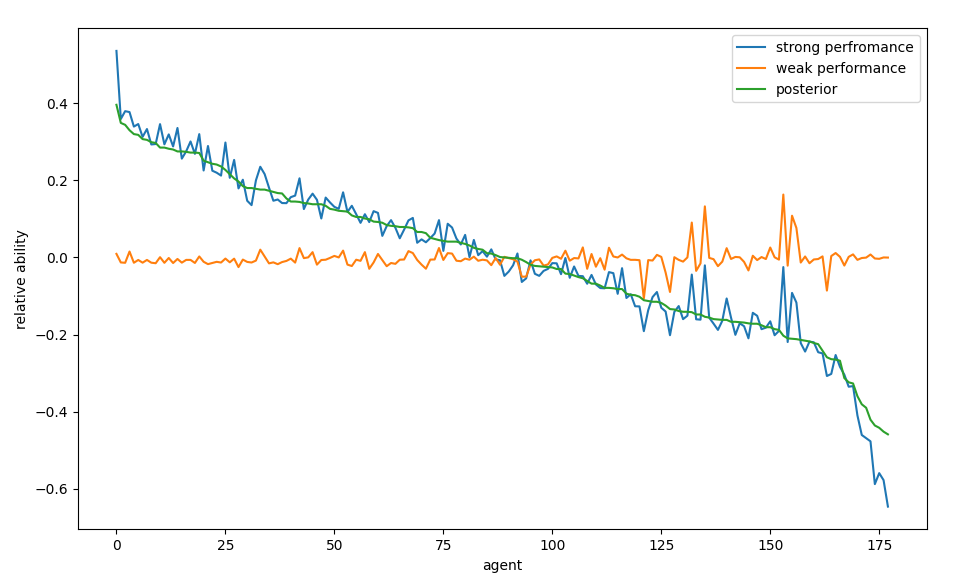
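
A minimal sketch of how the confusion matrix and the derived precision and recall can be computed, assuming the positive class is "customer saved", so that a false positive is a predicted save that ended in a cancellation. The labels below are illustrative, not model output.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def confusion_matrix(actual: Sequence[bool], predicted: Sequence[bool]) -> Dict[str, int]:
    """Counts of TP, FP, FN, TN, where True means 'customer saved' (assumed positive class)."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p and a:
            counts["TP"] += 1
        elif p and not a:
            counts["FP"] += 1
        elif a:
            counts["FN"] += 1
        else:
            counts["TN"] += 1
    return {k: counts[k] for k in ("TP", "FP", "FN", "TN")}


def precision_recall(cm: Dict[str, int]) -> Tuple[float, float]:
    """Precision and recall of the positive class; 0.0 when undefined."""
    predicted_pos = cm["TP"] + cm["FP"]
    actual_pos = cm["TP"] + cm["FN"]
    precision = cm["TP"] / predicted_pos if predicted_pos else 0.0
    recall = cm["TP"] / actual_pos if actual_pos else 0.0
    return precision, recall


actual = [True, True, False, False, False, True]
predicted = [True, True, True, True, False, False]
cm = confusion_matrix(actual, predicted)
```

Many false positives show up directly as low precision: the model predicts saves for customers who still cancel.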

We know that agent skill is a very important variable to model, but we have little agent data to model it directly, so we treat it as a latent variable.

To assess relative ability, we imagine the agents competing in a tournament: the customer is the referee, and the pitch is the combination of area and product.

agent ability simulating a match between agents

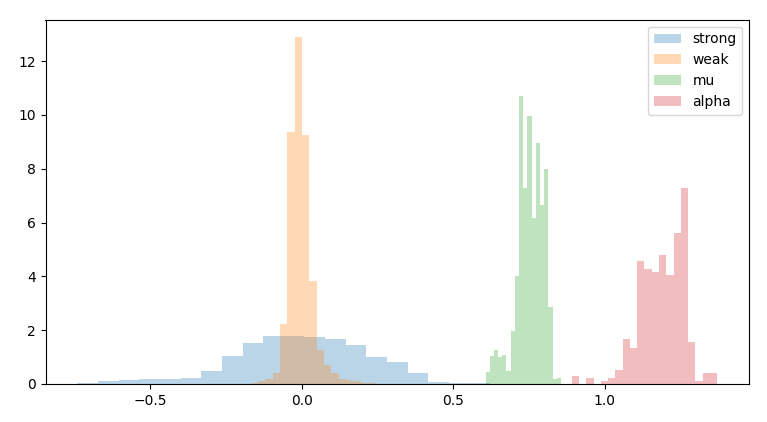

Based on the historical series, we run a Stan simulation with a prior on agent ability, comparing agent performance on the different pitches.

prior of the agent ability on area and product

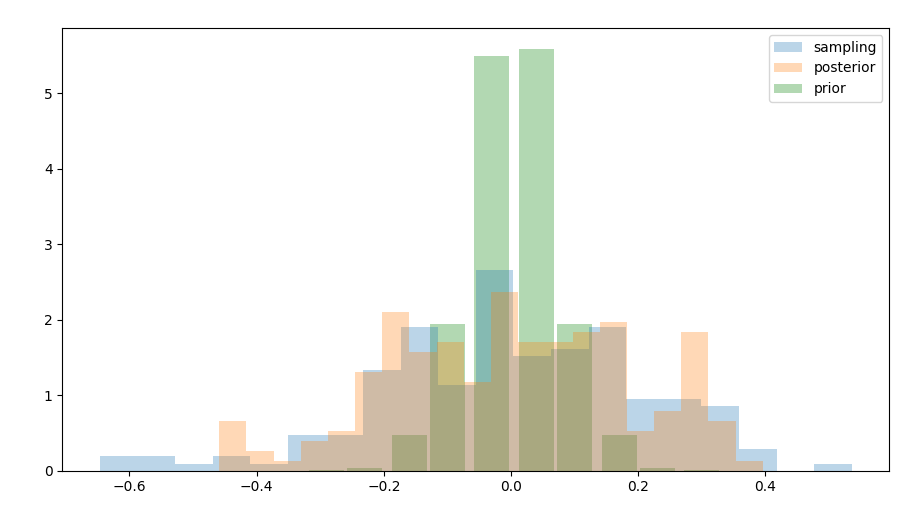

Once we have the prior distribution, we run a simulation across all agents over multiple games.

posterior of the agent ability on area and product

We then create a ranking.

posterior of the agent ability on area and product

We then simulate a series of matches and finally calculate the posterior agent ability.

posterior of the agent ability on area and product
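
The Stan model itself is not shown in the text. As a minimal stand-in, this sketch assumes a logistic model in which the probability of a save is `sigmoid(ability[agent] + effect[pitch])`, with a pitch being an area/product pair, and computes a maximum a posteriori estimate under a Normal(0, `prior_sd`) prior by plain gradient ascent. This is a point estimate rather than the posterior distribution that Stan's sampler would return, and all names and defaults are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_abilities(
    calls: List[Tuple[str, str, bool]],
    prior_sd: float = 1.0,
    lr: float = 0.5,
    steps: int = 2000,
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """MAP estimate for P(save) = sigmoid(ability[agent] + effect[pitch]).

    calls: (agent, pitch, saved), where a pitch is an area/product pair.
    A Normal(0, prior_sd) prior on every parameter acts as L2 shrinkage.
    """
    ability = {a: 0.0 for a, _, _ in calls}
    effect = {p: 0.0 for _, p, _ in calls}
    n = len(calls)
    for _ in range(steps):
        # gradient of the log posterior: prior term plus data residuals
        grad_a = {a: -v / prior_sd**2 for a, v in ability.items()}
        grad_p = {p: -v / prior_sd**2 for p, v in effect.items()}
        for agent, pitch, saved in calls:
            residual = float(saved) - sigmoid(ability[agent] + effect[pitch])
            grad_a[agent] += residual
            grad_p[pitch] += residual
        # dividing by n rescales the step without moving the optimum
        for a in ability:
            ability[a] += lr * grad_a[a] / n
        for p in effect:
            effect[p] += lr * grad_p[p] / n
    return ability, effect


def ranking(ability: Dict[str, float]) -> List[str]:
    """Agents ordered from highest to lowest estimated ability."""
    return sorted(ability, key=ability.get, reverse=True)
```

Separating the pitch effect from the agent ability is what lets agents working on harder area/product combinations be compared fairly with the others.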

Following game theory, for a given set of rules the compensation implies a strategy, so tuning the compensation is crucial for performance.

game theory sketch

We need to strike a balance between individual and company compensation:

We consider all users equal, and the agent compensation is based on the save rate. We assume a save probability of 73% ± 22%:

Each user/agent pair has a different success probability: what if the compensation were based on the increase in success probability?

Users have different values depending on their records; the value is weighted by the previous bill and the tenure:
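
A minimal sketch of the three schemes, assuming the model's save probability for the user/agent pair is available for each call. The uplift rule (pay `1 - p` on a save, so easy saves pay less) is one possible reading of "success probability increase", and the value formula and all rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Call:
    saved: bool
    predicted_p: float  # model's save probability for this user/agent pair
    last_bill: float
    tenure_months: float


def flat_commission(call: Call, rate: float = 1.0) -> float:
    # all users are equal: every save pays the same
    return rate if call.saved else 0.0


def uplift_commission(call: Call, rate: float = 1.0) -> float:
    # reward the gain over the expected outcome: a save that was
    # already likely (high predicted_p) pays less than an unlikely one
    return rate * (1.0 - call.predicted_p) if call.saved else 0.0


def value_commission(call: Call, rate: float = 0.01, tenure_weight: float = 0.5) -> float:
    # weight the save by a customer value built from last bill and tenure
    value = call.last_bill * (1.0 + tenure_weight * call.tenure_months / 12.0)
    return rate * value if call.saved else 0.0
```

Under the flat rule an agent is indifferent between customers; the other two rules shift effort towards hard-to-save or high-value calls.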

We have a list of users, each defined by their tenure, bill amount, product selection and call queue.

definition of users based on tenure, bill value, product selection and queue

We have a list of agents, each defined by their certifications, tenure, product knowledge and the queue they work in.

specification of agents

We can estimate the probability of success of the call from a few features, and we see that retention is easier for high-value customers.

the success of the call depends on a few features

Once a user calls, the success probability depends on the type of agent taking the call.

user/agent success probability

Based on historical data, we predict the value of the customer in case of a successful call.

estimated customer value after retention

We propose parallel simulations in which agents are incentivised by different compensation schemes, which imply different strategies and different levels of achievement of company and personal goals.

agent strategy based on compensation

code: sim_compensation.py

We create a simulation where we:

simulation details

The compensations are:

In addition, we simulate the effect of the Afiniti engine on pairing users and agents:

We suppose each agent has persuasive skills that can influence the outcome of the call. We give every agent the same persuasive strength, independent of the compensation scheme, but the boost it applies is proportional to the compensation.

Display during the call with prediction box and compensation in case of success

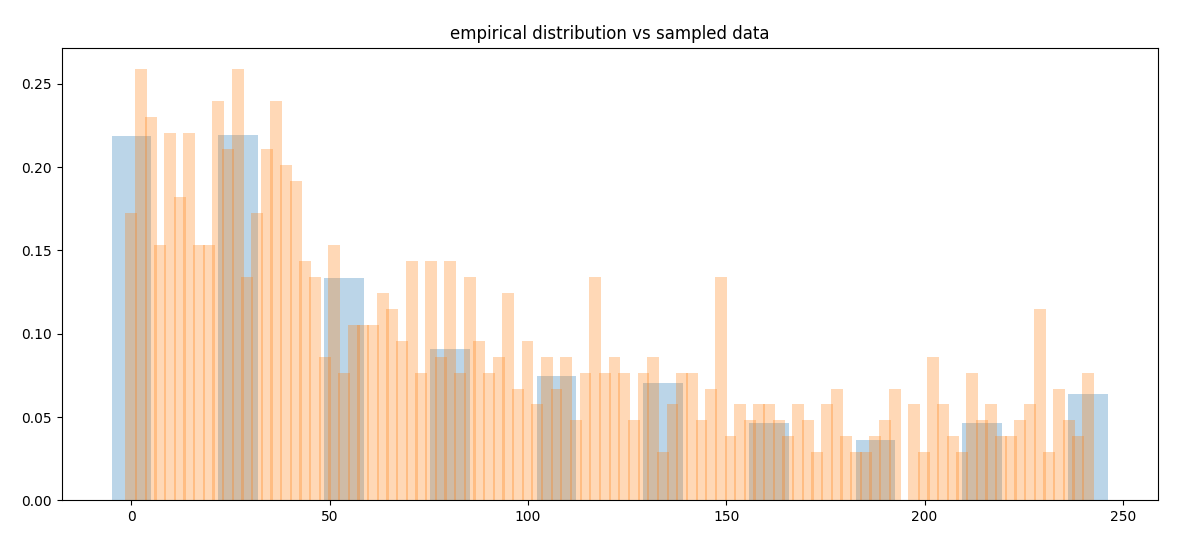
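
A minimal sketch of this assumption: the persuasion strength is the same for every agent, and the boost it adds to the save probability scales with the share of the maximum commission at stake on the call. The linear form, the clipping to [0, 1] and the `persuasion` value are hypothetical choices, not the parameters of sim_compensation.py.

```python
import random
from typing import Optional


def boosted_probability(base_p: float, commission: float, max_commission: float,
                        persuasion: float = 0.1) -> float:
    """Save probability after persuasion; the boost scales with the commission at stake."""
    if max_commission <= 0:
        return base_p
    boost = persuasion * commission / max_commission
    return min(1.0, max(0.0, base_p + boost))


def simulate_call(base_p: float, commission: float, max_commission: float,
                  persuasion: float = 0.1, rng: Optional[random.Random] = None) -> bool:
    """Draw one call outcome (True = customer saved)."""
    rng = rng or random.Random()
    return rng.random() < boosted_probability(base_p, commission, max_commission, persuasion)
```

Because the same persuasion strength applies under every scheme, differences between simulations come only from where each scheme puts the commission.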

We create a set of users and agents following empirical distributions:

empirical and simulated distribution of last bill

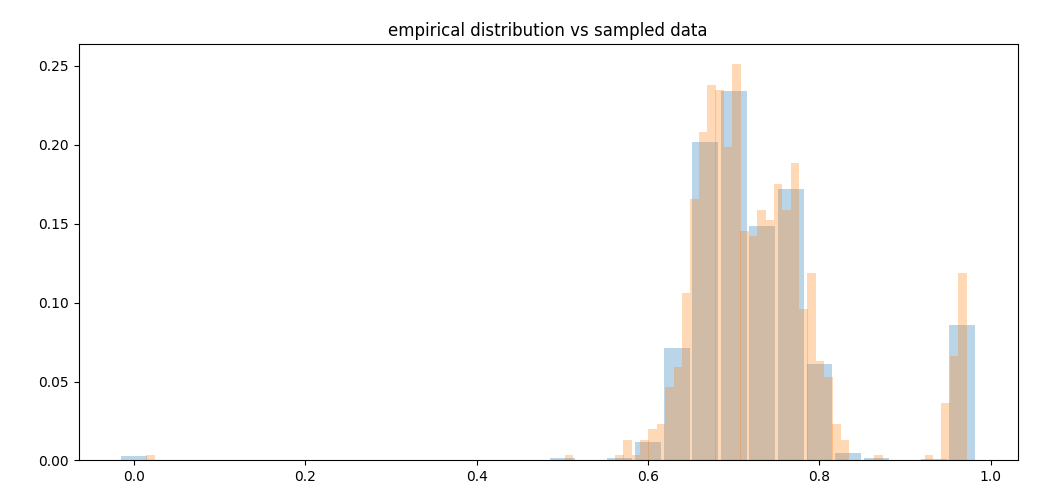

We do this for last bill, tenure and save rate.

empirical and simulated distribution of save rate

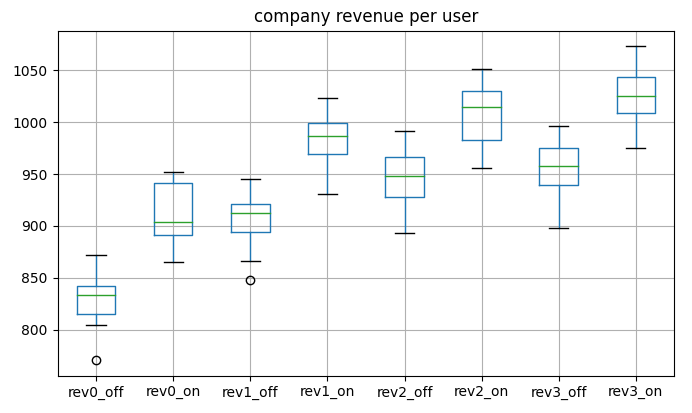

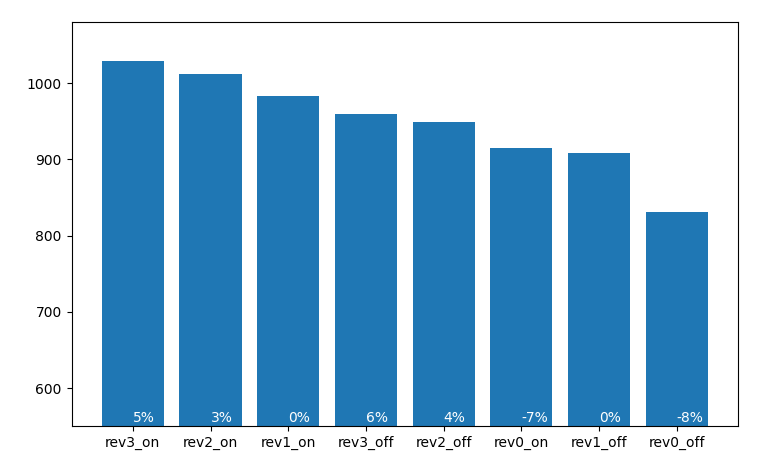
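
The text does not say how the empirical distributions are sampled. A minimal sketch, assuming inverse-CDF sampling with linear interpolation between the sorted observations (a smoothed bootstrap), one sampler per variable. Sampling each variable independently ignores any dependence between them, which is an assumption of this sketch.

```python
import random
from typing import Callable, List, Sequence


def empirical_sampler(observed: Sequence[float], seed: int = 0) -> Callable[[int], List[float]]:
    """Return a function drawing k values from the empirical distribution of `observed`."""
    data = sorted(observed)
    n = len(data)
    rng = random.Random(seed)

    def sample(k: int) -> List[float]:
        draws = []
        for _ in range(k):
            u = rng.random() * (n - 1)  # continuous position on the sorted sample
            i = int(u)
            frac = u - i
            upper = data[min(i + 1, n - 1)]
            draws.append(data[i] + frac * (upper - data[i]))  # interpolate between neighbours
        return draws

    return sample
```

For example, `empirical_sampler(last_bills)(10_000)` would generate simulated bills within the observed range, where `last_bills` stands for the extracted column.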

Comparing the different strategies, we see how the company revenue increases.

company revenue in different scenarios

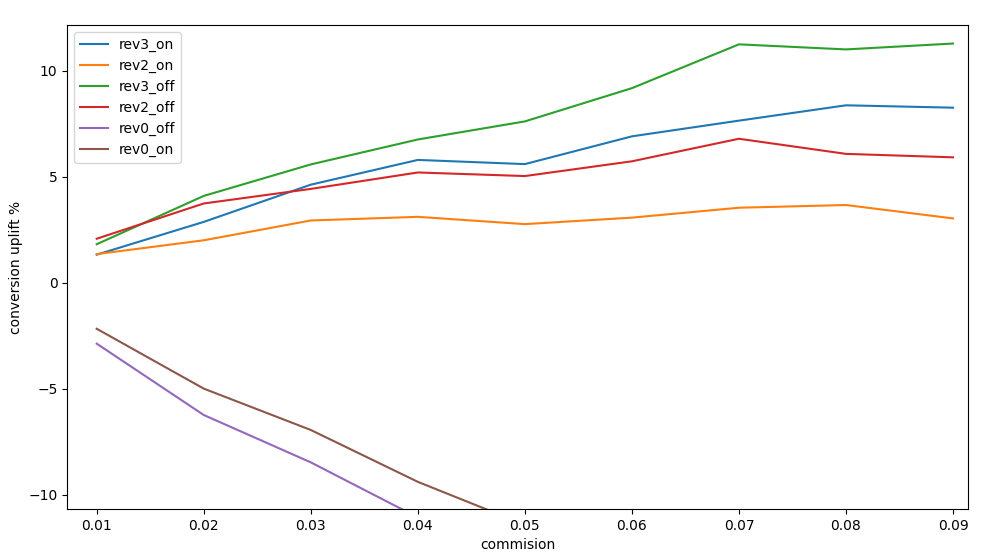

A close-up shows the revenue uplift averaged across all simulations.

uplift due to the different strategies

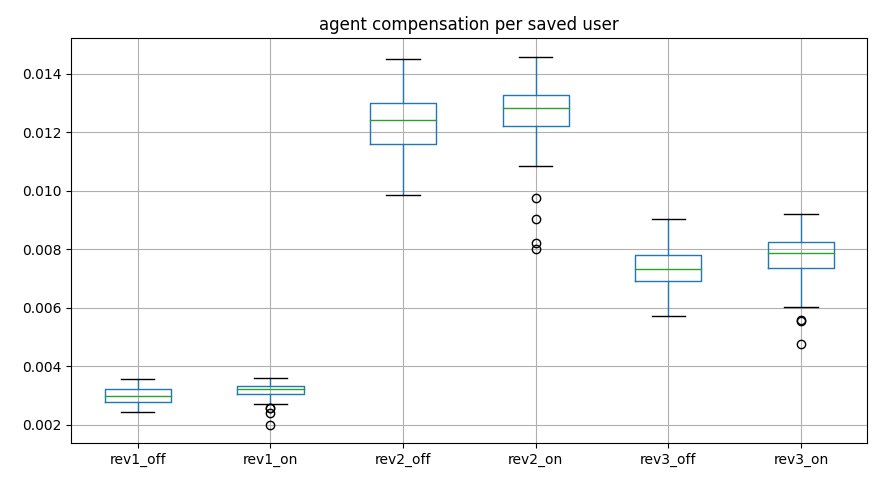

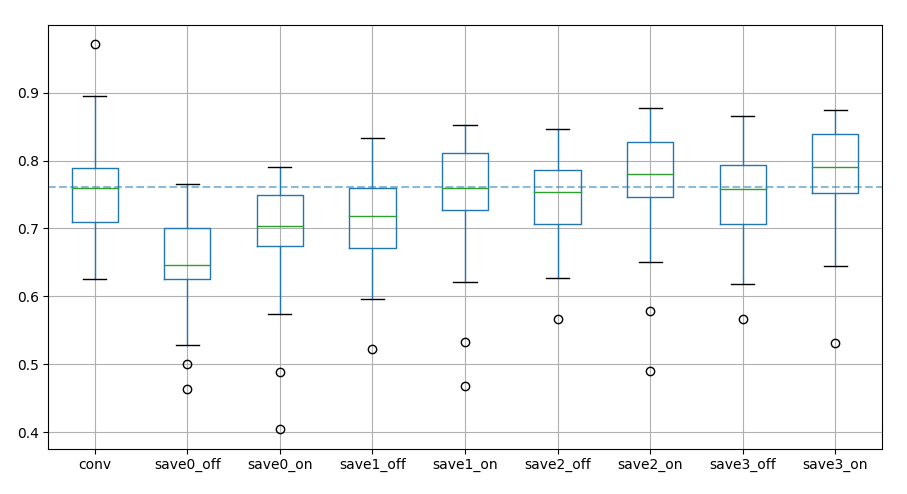

We want to make sure that the agent compensation is fairly distributed across all agents, and we see a clear increase in agent compensation depending on the strategy.

compensation distribution across all agents

We see that the company uplift is fairly distributed.

distributions are not broader

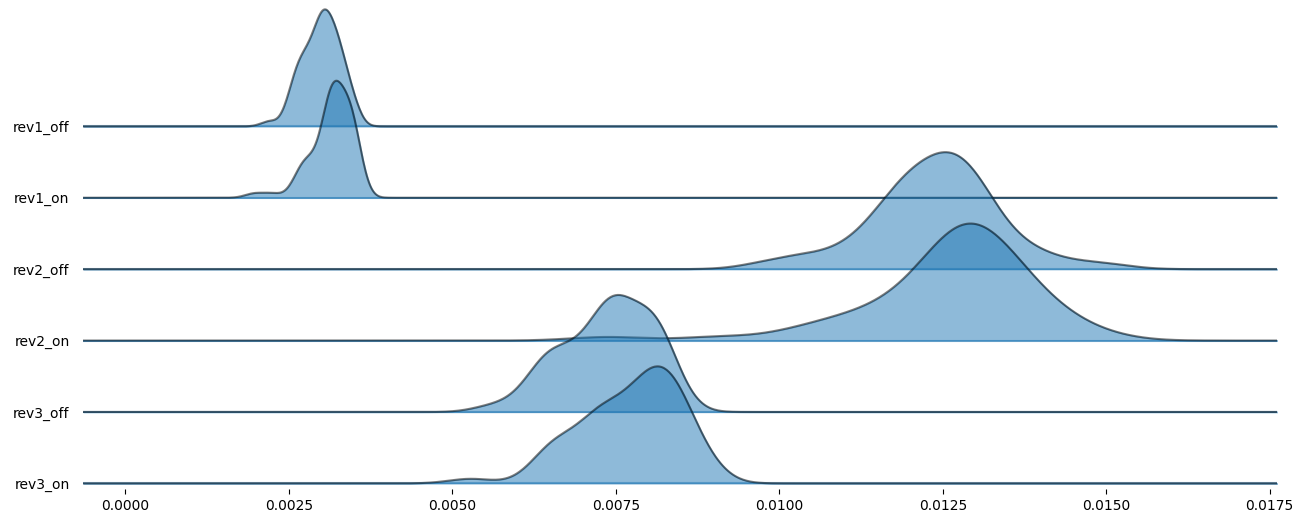

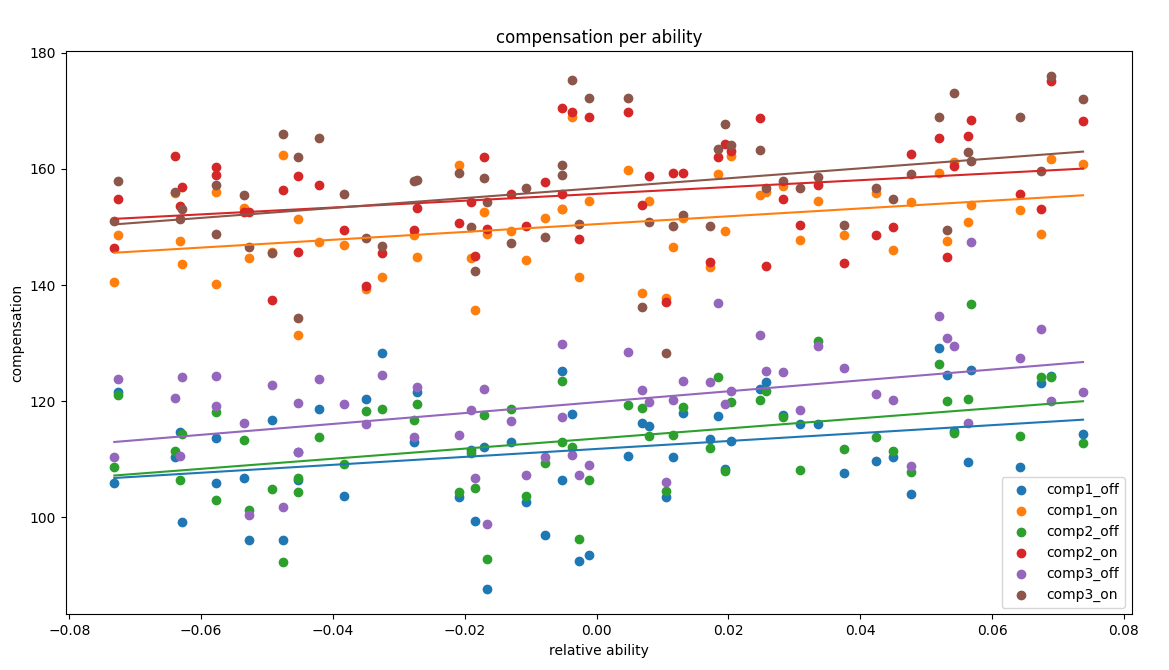

Finally, we show that the proposed compensation schemes still reward individual ability.

all the proposed methods increase the compensation together with the agent ability

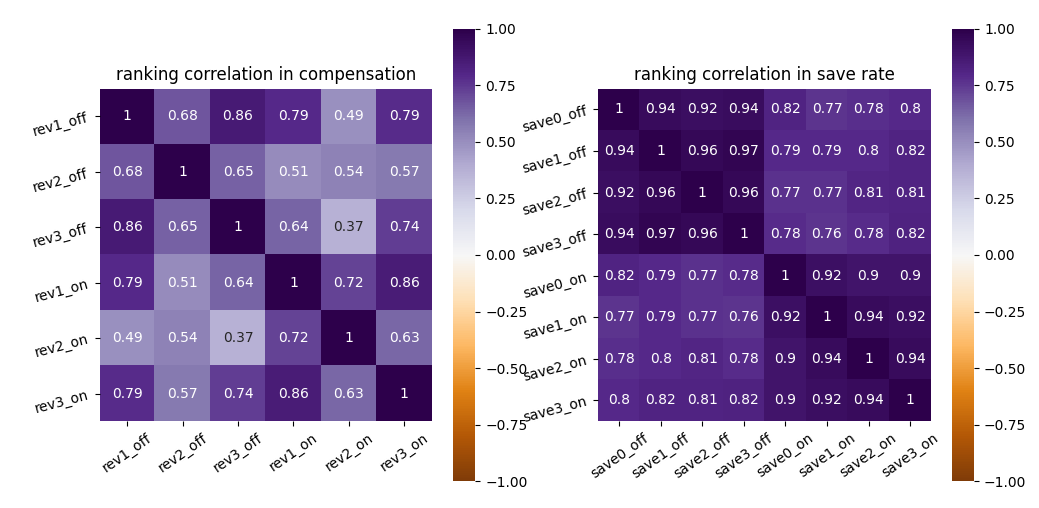

We show that the rank correlation on save rate remains good across the different compensation schemes. The pairing engine increases the shuffling between agents, and the compensation scheme also increases the shuffling of the agent ranking.

rank correlation across different compensation models

We have a few parameters to tune:

What is a boost, and how can we quantify the influenceability versus the motivation of an agent? We can set a benchmark with completely unmotivated agents and use it as a baseline for modelling the commission scheme. We see that the delta in agent compensation relative to the save rate increases steadily.

uplift in commission compared to the current scheme

With the different methods, we can define the targets by replacing the save rate with the weighted save rate, while maintaining a similar reward process.

save rate across different compensation schemes
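
A minimal sketch of the difference between the two targets, assuming each call carries a per-customer value (for instance one built from last bill and tenure). The weighted save rate is the share of customer value retained rather than the share of calls saved; the values below are hypothetical.

```python
from typing import Sequence


def save_rate(saved: Sequence[bool]) -> float:
    """Plain save rate: share of calls that ended with the customer retained."""
    return sum(saved) / len(saved)


def weighted_save_rate(saved: Sequence[bool], value: Sequence[float]) -> float:
    """Share of customer value retained: each call counts with its customer's value."""
    total = sum(value)
    if total <= 0:
        raise ValueError("total customer value must be positive")
    return sum(v for s, v in zip(saved, value) if s) / total


saved = [True, True, False]
value = [50.0, 50.0, 200.0]  # hypothetical customer values
print(save_rate(saved), weighted_save_rate(saved, value))
```

Here two of three calls are saved (plain rate 2/3), but the lost customer carries two thirds of the value, so the weighted save rate is 1/3.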

We can predict the outcome of a call with a certain accuracy and use our predictive capabilities to shape different simulations.

The current compensation model does not motivate a win-win strategy for the company and the agent: the current targets push the agent to be as good as the others and do not consider the risk of losing a high-value customer.

We have simulated different scenarios and fine-tuned a compensation model to increase customer retention, company revenue and the agent's personal reward.

We have shown that compensating an agent proportionally to the risk of the call or the customer value increases both company and personal goals.

We have also shown that the increased revenue is fairly distributed, and that even less performant agents profit from the change in call distribution and strategy.

- code: github
- project: github
- article: match
- article: stan
- article: stan