A neural network consists of layers of neurons connected by activation functions that transform an input into an output. In between there are hidden layers, which build the logic between inputs and outputs. The prediction is evaluated via a score function, whose result is used by an optimizer that backpropagates along the network to update the neurons' weights and minimize the error of the next iteration.

To avoid biases, data is split into training, validation and test blocks. The training set is the one used to compute the network's weights, the validation set is used to compute an unbiased score of the model and to tune hyperparameters, and the test set is used to assess the final score of the model, since this data hasn't been used before.
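The split described above can be sketched in a few lines. This is a minimal illustration using only the standard library; the function name `split_dataset` and the 70/15/15 proportions are assumptions chosen for the example, not values from the text.

```python
import random


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle the rows and cut them into train, validation and test lists.

    The fractions are illustrative defaults (assumption); the test set
    receives whatever is left after the first two cuts.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed for reproducibility
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling before cutting matters when the data is stored in some order (by date or by class, for instance), otherwise the three blocks would not follow the same distribution.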

Information flows in the forward direction only: the network is trained in a supervised way and has no feedback connections.

We take a matrix $X$ of dimensions $n \times m$ and a prediction variable $y$ of $k$ elements. We select a weight vector $w_1$ with $l_1$ entries and a ReLU function $g_1: \mathbb{R}^{l_1} \to \mathbb{R}^{l_1}$ so that

$$ (g_1(y))_i = \max(y_i, 0), \qquad z_1 = g_1(w_1 \beta + b_1) $$

The second and third layers have:

$$ z_2 = g_2(w_2 z_1 + b_2), \qquad z_3 = g_3(w_3 z_2 + b_3) $$

and finally the predicted variable:

$$ \hat y_i = \mathrm{softmax}_i(z_3) = \frac{e^{(z_3)_i}}{\sum_j e^{(z_3)_j}} $$

We therefore have to set the parameters $w_1, w_2, w_3, b_1, b_2, b_3$.
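The three-layer forward pass can be written out as a small sketch. Several choices here are assumptions made for illustration: the weights are treated as matrices (lists of rows), the input is called `x` and plays the role of the first-layer input in the formula, $g_2$ is taken to be ReLU and $g_3$ the identity, and the numeric values are arbitrary.

```python
import math


def relu(v):
    """Element-wise max(v_i, 0)."""
    return [max(v_i, 0.0) for v_i in v]


def softmax(v):
    """Exponentiate and normalize; the max is subtracted for stability."""
    m = max(v)
    exps = [math.exp(v_i - m) for v_i in v]
    total = sum(exps)
    return [e / total for e in exps]


def affine(W, x, b):
    """Compute W x + b, with W given as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def forward(x, params):
    """Three-layer forward pass followed by softmax."""
    (W1, b1), (W2, b2), (W3, b3) = params
    z1 = relu(affine(W1, x, b1))
    z2 = relu(affine(W2, z1, b2))   # g2 = ReLU (assumption)
    z3 = affine(W3, z2, b3)         # g3 = identity (assumption)
    return softmax(z3)


# Arbitrary illustrative parameters: 2 inputs -> 2 -> 2 -> 3 classes.
params = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0]),
]
y_hat = forward([1.0, 2.0], params)
print(y_hat)  # three probabilities that sum to 1
```

Training then consists of adjusting the six parameter groups so that `y_hat` moves closer to the observed labels.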

The layers typically used in a network are:

Flatten: from matrix to array

input(5,3) + dense(16) = output(5,16)

input(5,3) + flatten() = input(15) + dense(16) = output(16)
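The shape arithmetic above can be checked with a plain-Python sketch. The helpers `dense`, `flatten` and `make_weights` are hypothetical stand-ins written for this example, not the API of any library; the assumption is that a dense layer acts on the last axis of its input.

```python
def dense(x, weights, bias):
    """Dense layer on a vector x; weights has shape (len(x), units)."""
    return [sum(x_i * w_row[j] for x_i, w_row in zip(x, weights)) + bias[j]
            for j in range(len(bias))]


def flatten(matrix):
    """Turn a matrix (list of rows) into a flat list."""
    return [value for row in matrix for value in row]


def make_weights(n_in, units, value=0.01):
    """Constant weights, enough to demonstrate the shapes."""
    return [[value] * units for _ in range(n_in)]


inputs = [[float(r * 3 + c) for c in range(3)] for r in range(5)]  # (5, 3)

# dense(16) on input(5,3): applied row by row, so the 5 is preserved.
w_a, b_a = make_weights(3, 16), [0.0] * 16
out_a = [dense(row, w_a, b_a) for row in inputs]
print(len(out_a), len(out_a[0]))  # 5 16

# flatten() first: the 5x3 matrix becomes 15 values, then dense(16).
flat = flatten(inputs)
w_b, b_b = make_weights(15, 16), [0.0] * 16
out_b = dense(flat, w_b, b_b)
print(len(flat), len(out_b))  # 15 16
```

Note the difference in parameter count: the first variant needs 3 x 16 weights, the second 15 x 16.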

Batch size: the total number of examples in a training iteration.

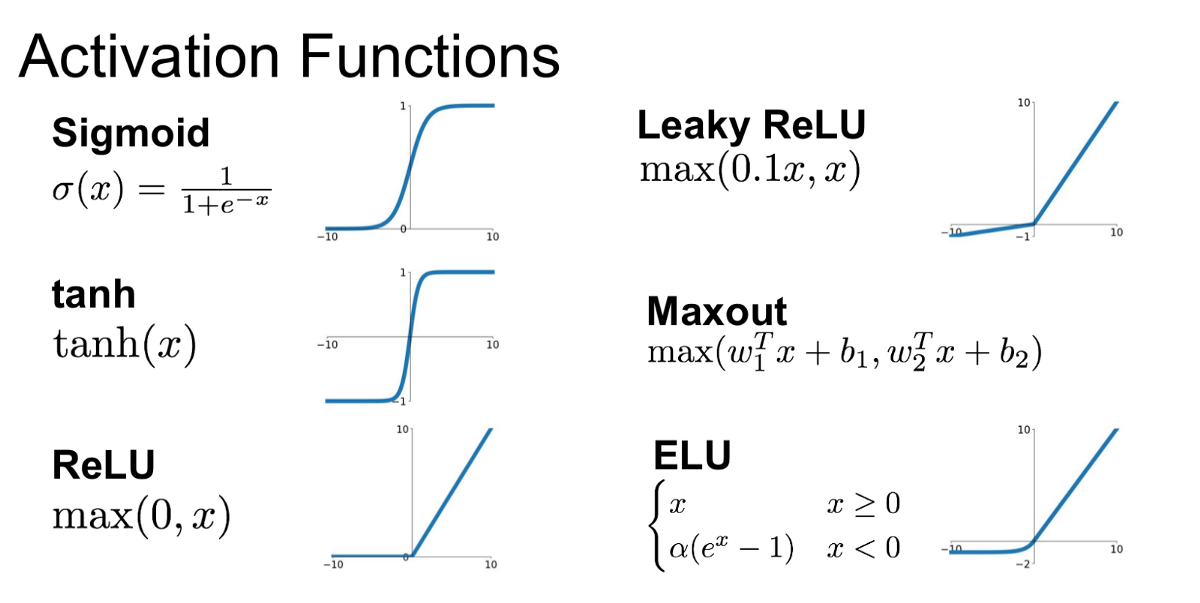

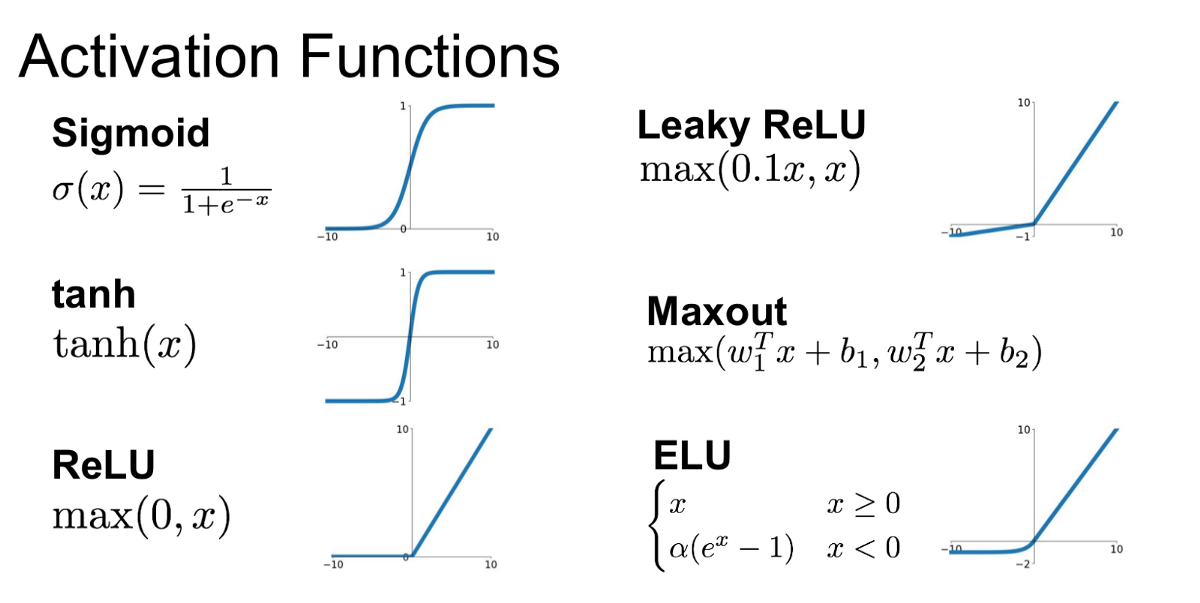

Activation functions are used to connect neurons between layers. Usually the activation function is constant throughout the hidden layers and might vary at the output layer. The main reason to select a particular activation function for the output layer is the domain of the output variable.

A key feature of an activation function is differentiability, since backpropagation requires at least the first derivative of the function.

List of activation functions:

Sigmoid / Logistic

Advantages

Smooth gradient, preventing “jumps” in output values. Output values bound between 0 and 1, normalizing the output of each neuron. Clear predictions: for X above 2 or below -2, the function tends to bring the Y value (the prediction) to the edge of the curve, very close to 1 or 0.

Disadvantages

Vanishing gradient: for very high or very low values of X, there is almost no change to the prediction. This can result in the network refusing to learn further, or being too slow to reach an accurate prediction. Outputs are not zero centered. Computationally expensive.

TanH / Hyperbolic Tangent

Advantages

Zero centered: this makes it easier to model inputs that have strongly negative, neutral, and strongly positive values. Otherwise like the Sigmoid function.

Disadvantages

Like the Sigmoid function.

ReLU (Rectified Linear Unit)

Advantages

Computationally efficient: allows the network to converge very quickly. Non-linear: although it looks like a linear function, ReLU has a derivative function and allows for backpropagation.

Disadvantages

The dying ReLU problem: when inputs are zero or negative, the gradient of the function is zero, so the affected neurons receive no updates through backpropagation and cannot learn.

Leaky ReLU

Advantages

Prevents the dying ReLU problem: this variation of ReLU has a small positive slope in the negative area, so it does enable backpropagation, even for negative input values. Otherwise like ReLU.

Disadvantages

Results not consistent: leaky ReLU does not provide consistent predictions for negative input values.

Parametric ReLU

Advantages

Allows the negative slope to be learned: unlike leaky ReLU, this function takes the slope α of the negative part as a parameter. It is, therefore, possible to perform backpropagation and learn the most appropriate value of α. Otherwise like ReLU.

Disadvantages

May perform differently for different problems.

Softmax: $e^{x_i} / \sum_j e^{x_j}$, which resembles the Boltzmann probability

Advantages

Able to handle multiple classes, where other activation functions handle only one: it normalizes the outputs for each class between 0 and 1 and divides by their sum, giving the probability of the input value being in a specific class. Useful for output neurons: typically Softmax is used only for the output layer, for neural networks that need to classify inputs into multiple categories.

Swish

Swish is a new, self-gated activation function discovered by researchers at Google. According to their paper, it performs better than ReLU with a similar level of computational efficiency. In experiments on ImageNet with identical models running ReLU and Swish, the new function achieved a top-1 classification accuracy 0.6-0.9% higher.
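The functions listed above are short enough to write down directly. The following is a minimal scalar sketch using only the standard library; the default slope `alpha=0.01` for leaky ReLU and the form `x * sigmoid(x)` for Swish (its variant without a scaling parameter) are assumptions of this example. Parametric ReLU has the same formula as leaky ReLU, with `alpha` updated during training instead of fixed.

```python
import math


def sigmoid(x):
    """Logistic function, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Hyperbolic tangent, output in (-1, 1), zero centered."""
    return math.tanh(x)


def relu(x):
    """Zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but with a small slope alpha for negative inputs."""
    return x if x > 0 else alpha * x


def swish(x):
    """Self-gated: the input multiplied by its own sigmoid."""
    return x * sigmoid(x)


def softmax(xs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(xs)  # subtracting the max avoids overflow in exp
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x), leaky_relu(x), swish(x))
print(softmax([1.0, 2.0, 3.0]))
```

Printing the values at -2, 0 and 2 makes the differences visible: sigmoid and tanh saturate, ReLU cuts negative inputs to zero, and leaky ReLU and Swish keep a small negative response.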

Loss function: a function that evaluates the error between the fitted function and the variable to predict.

Squared error (regressions)

$$ F(x,y) = \frac{1}{N} \sum_i (y_i - w_i x)^2 $$

Logistic function

$$ F(x,y) = \sum_i \log\left(1 + e^{-y_i (w_i^T x + b_i)}\right) $$

Cross-entropy loss (classification, e.g. logistic regression)

Gini impurity

Hinge loss (support vector machine)

Exponential loss (AdaBoost)
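As a rough illustration, the losses listed above can be written as short functions. This sketch makes a few assumptions: labels for the logistic, hinge and exponential losses are encoded as -1 or +1, `scores` stands for the raw model output ($w^T x + b$), and the hinge and exponential losses are summed over the samples like the logistic loss.

```python
import math


def squared_error(y_true, y_pred):
    """Mean of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def logistic_loss(labels, scores):
    """Sum of log(1 + exp(-y * s)) with labels in {-1, +1}."""
    return sum(math.log(1.0 + math.exp(-y * s))
               for y, s in zip(labels, scores))


def cross_entropy(p_true, p_pred):
    """-sum(t * log(p)) over classes; zero-probability targets are skipped."""
    return -sum(t * math.log(p) for t, p in zip(p_true, p_pred) if t > 0)


def gini_impurity(class_probs):
    """1 - sum(p^2): zero for a pure node."""
    return 1.0 - sum(p * p for p in class_probs)


def hinge_loss(labels, scores):
    """Sum of max(0, 1 - y * s): zero once the margin exceeds 1."""
    return sum(max(0.0, 1.0 - y * s) for y, s in zip(labels, scores))


def exponential_loss(labels, scores):
    """Sum of exp(-y * s): penalizes confident mistakes very strongly."""
    return sum(math.exp(-y * s) for y, s in zip(labels, scores))


print(squared_error([0.0, 0.0], [1.0, 3.0]))   # 5.0
print(hinge_loss([1, -1], [2.0, -0.5]))        # 0.5
print(gini_impurity([0.5, 0.5]))               # 0.5
```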

To evaluate the quality of a model, different scores are used to assess the predictions.

In backpropagation, the error/cost is evaluated after running a prediction. The cost function is differentiated, and an optimizer runs through the neurons of the network, changing their weights to minimize the error of the next iteration.

The optimizer suggests the direction for the minimization of the cost function

$$ \min_x F(x) := \sum_i f_i(x) $$

To minimize the cost function f(x,y), first-order optimizers use the gradient, while second-order optimizers also use the Hessian.

gradient

Hessian $$ H^2 := \sum_{j} \nabla f_{ij} (x_j) \nabla f_{ij} (x_j)^T $$

Preconditioner $B := \operatorname{diag}(H)$

gradient descent

stochastic gradient descent
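The difference between the two can be shown on a toy problem. The sketch below fits a single weight `w` in `y = w * x` by least squares; the data, learning rate and step counts are arbitrary values chosen for the illustration. Gradient descent averages the gradient over all samples at each step, while stochastic gradient descent uses one randomly picked sample per step.

```python
import random


def grad_i(w, x_i, y_i):
    """Gradient of f_i(w) = (y_i - w * x_i)^2 with respect to w."""
    return -2.0 * x_i * (y_i - w * x_i)


def gradient_descent(xs, ys, lr=0.01, steps=200):
    """Each step uses the gradient averaged over the whole dataset."""
    w = 0.0
    for _ in range(steps):
        g = sum(grad_i(w, x, y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * g
    return w


def stochastic_gradient_descent(xs, ys, lr=0.01, steps=2000, seed=0):
    """Each step uses the gradient of one randomly chosen sample."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        i = rng.randrange(len(xs))
        w -= lr * grad_i(w, xs[i], ys[i])
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]  # noiseless data, true weight is 2

print(gradient_descent(xs, ys))
print(stochastic_gradient_descent(xs, ys))
```

Both runs end close to 2 here because the data is noiseless. On noisy data the stochastic version keeps fluctuating around the minimum unless the learning rate is decreased over time, in exchange for much cheaper steps.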

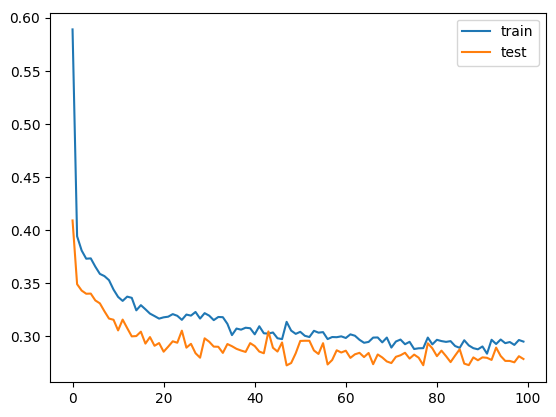

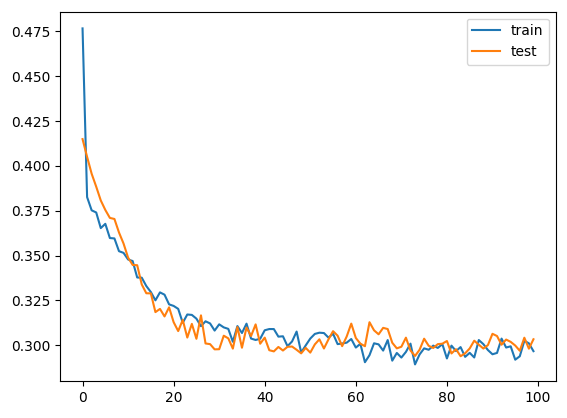

Models can have good scores while the solver is still too inaccurate for the complexity of the problem. The two cases are underfitting (over-simplifying) and overfitting (over-complicating).

The usual practice is to adapt the number of parameters to the complexity of the problem but there are other regularisation techniques to use.

We can use weight decay, where the squares of the weights are added to the loss function.

We can use dropout, which deactivates a portion of the neurons so that the optimizer in practice modifies the architecture.
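A minimal sketch of dropout on a list of activations follows. It uses the common "inverted" variant, which is an assumption of this example: surviving activations are divided by the keep probability so that their expected value is unchanged, and nothing is dropped outside training.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Zero each activation with probability `rate`, rescale the others."""
    if not training or rate == 0.0:
        return list(activations)  # no neurons are dropped at inference
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
hidden = [1.0] * 10
print(dropout(hidden, rate=0.3, rng=rng))
print(dropout(hidden, rate=0.3, rng=rng, training=False))
```

Because a different random subset is silenced at every training step, the network cannot rely on any single neuron, which is what gives the regularising effect.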

Regularisation adds a penalty on model complexity to reduce overfitting.

The main types of regularizations are:

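L1: $$ \sum_i \Big(y_i - \sum_j x_{ij}\beta_j\Big)^2 + \lambda \sum_j |\beta_j| $$

L2: $$ \sum_i \Big(y_i - \sum_j x_{ij}\beta_j\Big)^2 + \lambda \sum_j \beta_j^2 $$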

L1 shrinks the coefficients of the least important features to zero, which makes it suited for feature selection. λ is a hyperparameter to tune. The gradient of the L1 penalty does not depend on the magnitude of the parameters, so small weights can be driven all the way to zero.

Ridge regression (L2) is useful when there are few data points compared to features; it shrinks the coefficients and so reduces the significance of individual features.
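The two penalised losses, and the reason why L1 can push weights to exactly zero, can be sketched as follows. The function names and the numeric values are illustrative assumptions; at a weight of exactly zero the L1 penalty is not differentiable, and the sketch returns 0 there as a subgradient.

```python
def residual_sum_of_squares(X, y, beta):
    """sum_i (y_i - sum_j x_ij * beta_j)^2"""
    return sum((y_i - sum(x_ij * b_j for x_ij, b_j in zip(row, beta))) ** 2
               for row, y_i in zip(X, y))


def l1_loss(X, y, beta, lam):
    """Residuals plus lambda times the sum of absolute weights."""
    return residual_sum_of_squares(X, y, beta) + lam * sum(abs(b) for b in beta)


def l2_loss(X, y, beta, lam):
    """Residuals plus lambda times the sum of squared weights."""
    return residual_sum_of_squares(X, y, beta) + lam * sum(b * b for b in beta)


def l1_penalty_grad(beta, lam):
    """lambda * sign(beta_j): constant size, whatever the weight."""
    return [lam * ((b > 0) - (b < 0)) for b in beta]


def l2_penalty_grad(beta, lam):
    """2 * lambda * beta_j: shrinks together with the weight."""
    return [2.0 * lam * b for b in beta]


beta = [0.001, -5.0]
print(l1_penalty_grad(beta, 0.1))  # same magnitude for both weights
print(l2_penalty_grad(beta, 0.1))  # tiny for the small weight
```

For the small weight 0.001 the L2 pull is almost nil, so the weight lingers near zero without reaching it, while the L1 pull stays at full strength until the weight is eliminated.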

Cuts connections when the weight is low, helps against overfitting.

Binary classification

Not performant, dropout 20:

Just about right, dropout 30:

Overfitting, dropout 50:

Some models and algorithms are useful to enlarge the dataset for extended training purposes; this is known as data augmentation.

A classical example from computer vision is to iterate over all available images and make copies of them after rotation, cropping (taking a subset) or filtering, to increase the number of non-standard takes.
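A toy version of this procedure, with an image represented as a list of rows of grey levels, might look like the sketch below. The helper names, the centre crop and the brightness change used as the "filter" are all assumptions picked for the example; real pipelines typically rely on an image library.

```python
def rotate90(img):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def crop(img, top, left, height, width):
    """Take a rectangular subset of the image."""
    return [row[left:left + width] for row in img[top:top + height]]


def brighten(img, factor):
    """A simple filter: scale grey levels, capped at 255."""
    return [[min(255, round(p * factor)) for p in row] for row in img]


def augment(img):
    """Return modified copies of one image: 3 rotations, 1 crop, 1 filter."""
    h, w = len(img), len(img[0])
    r90 = rotate90(img)
    r180 = rotate90(r90)
    r270 = rotate90(r180)
    centre = crop(img, h // 4, w // 4, h // 2, w // 2)
    return [r90, r180, r270, centre, brighten(img, 1.2)]


image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image
copies = augment(image)
print(len(copies))  # 5
```

Each original image yields five extra training examples here, all of which keep the label of the original.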

During training, batches can be normalized when sampling the dataset would otherwise create big distortions in the distribution of min/max values.
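For a single feature, the normalization of one batch can be sketched as below. The scale `gamma`, the shift `beta` and the small constant `eps` follow the usual formulation of batch normalization; their default values here are assumptions for the example, and in a real network `gamma` and `beta` are learned.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch to zero mean, unit variance."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n
    # eps keeps the division safe when the batch has (almost) no variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]


print(batch_norm([1.0, 2.0, 3.0, 4.0, 5.0]))
print(batch_norm([100.0, 200.0, 300.0, 400.0, 500.0]))
```

The two batches differ by a factor of 100 but come out almost identical after normalization, which is how the layer removes scale distortions between batches.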

A separate article on GANs shows how the adversarial architecture helps create realistic fakes, along with some Keras implementations.

Transformers use an encoder-decoder architecture to reduce the input parameter space. The most significant changes compared to previous architectures are the attention layers and the enhanced embeddings.